GRACENOTE: FROM SPACE TO SOUND

wearable design / user research / sensors

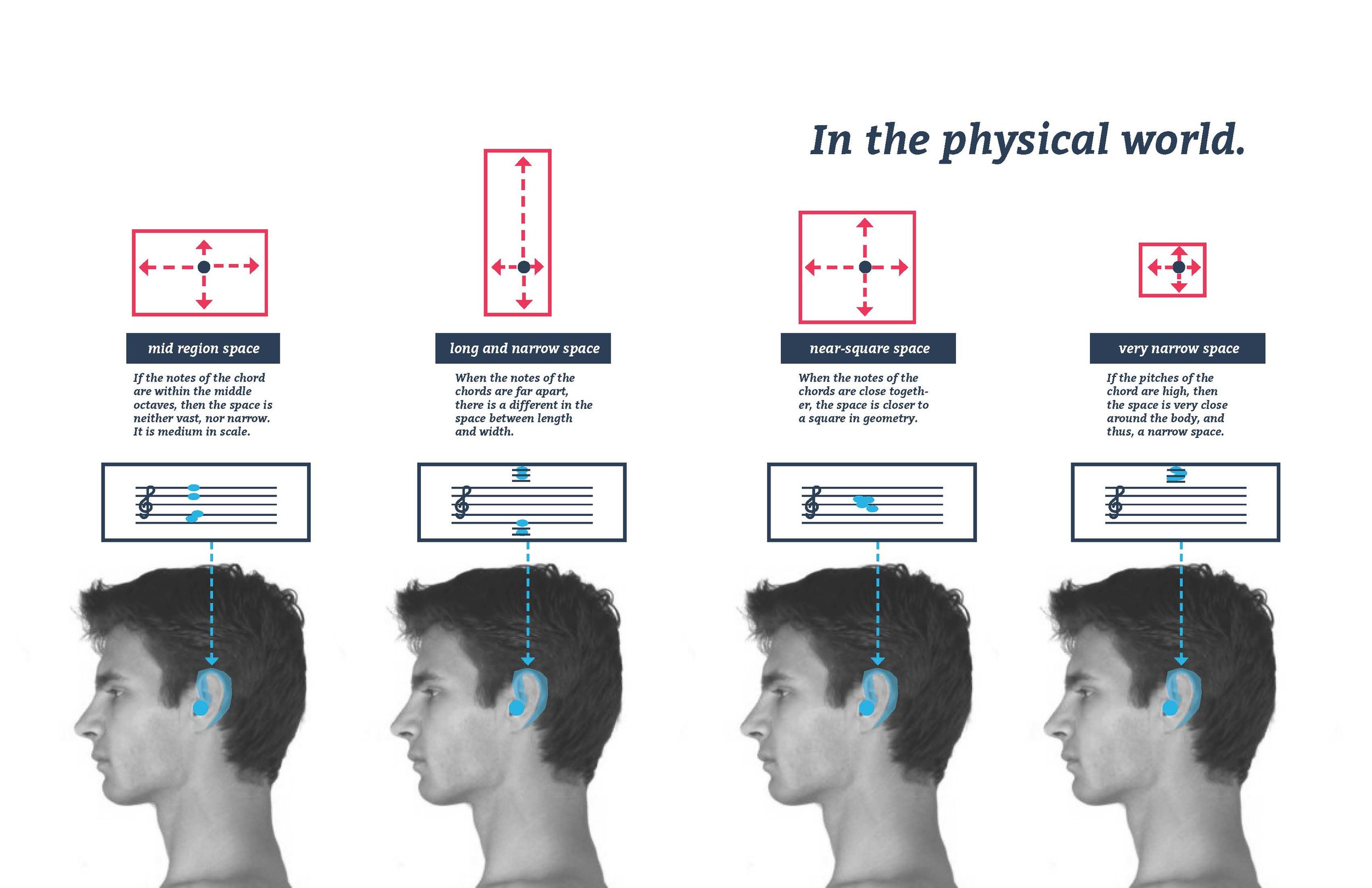

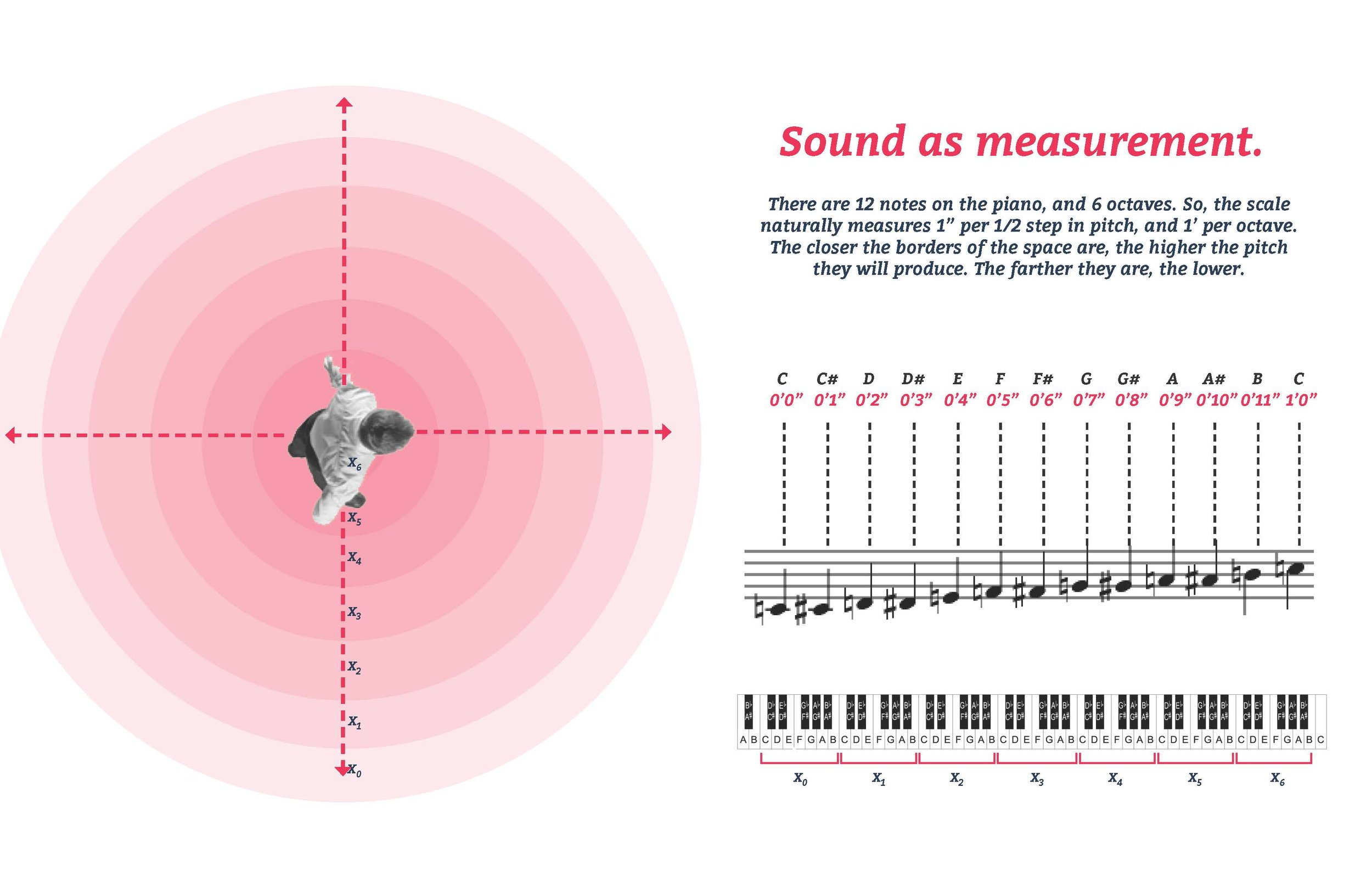

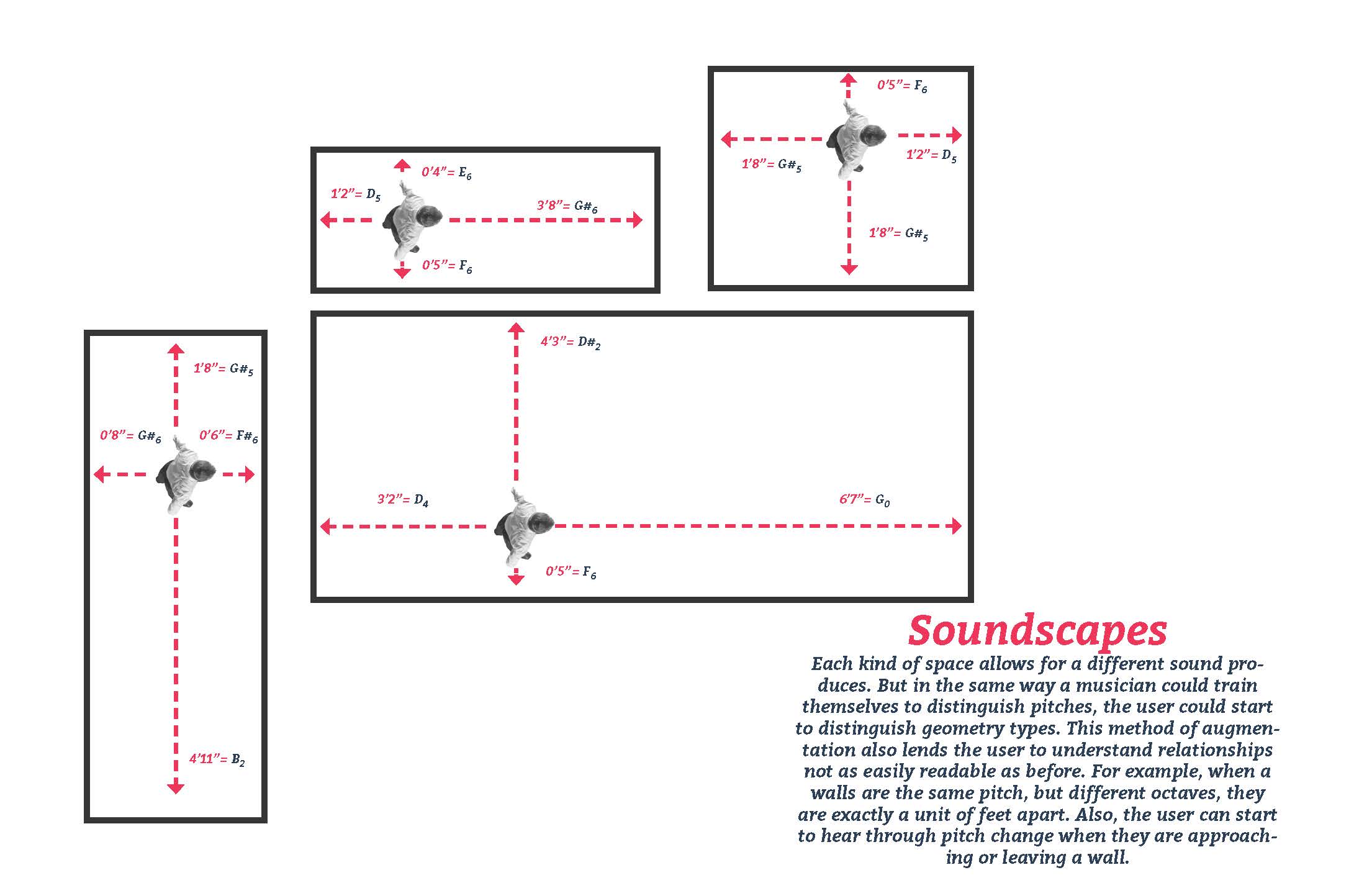

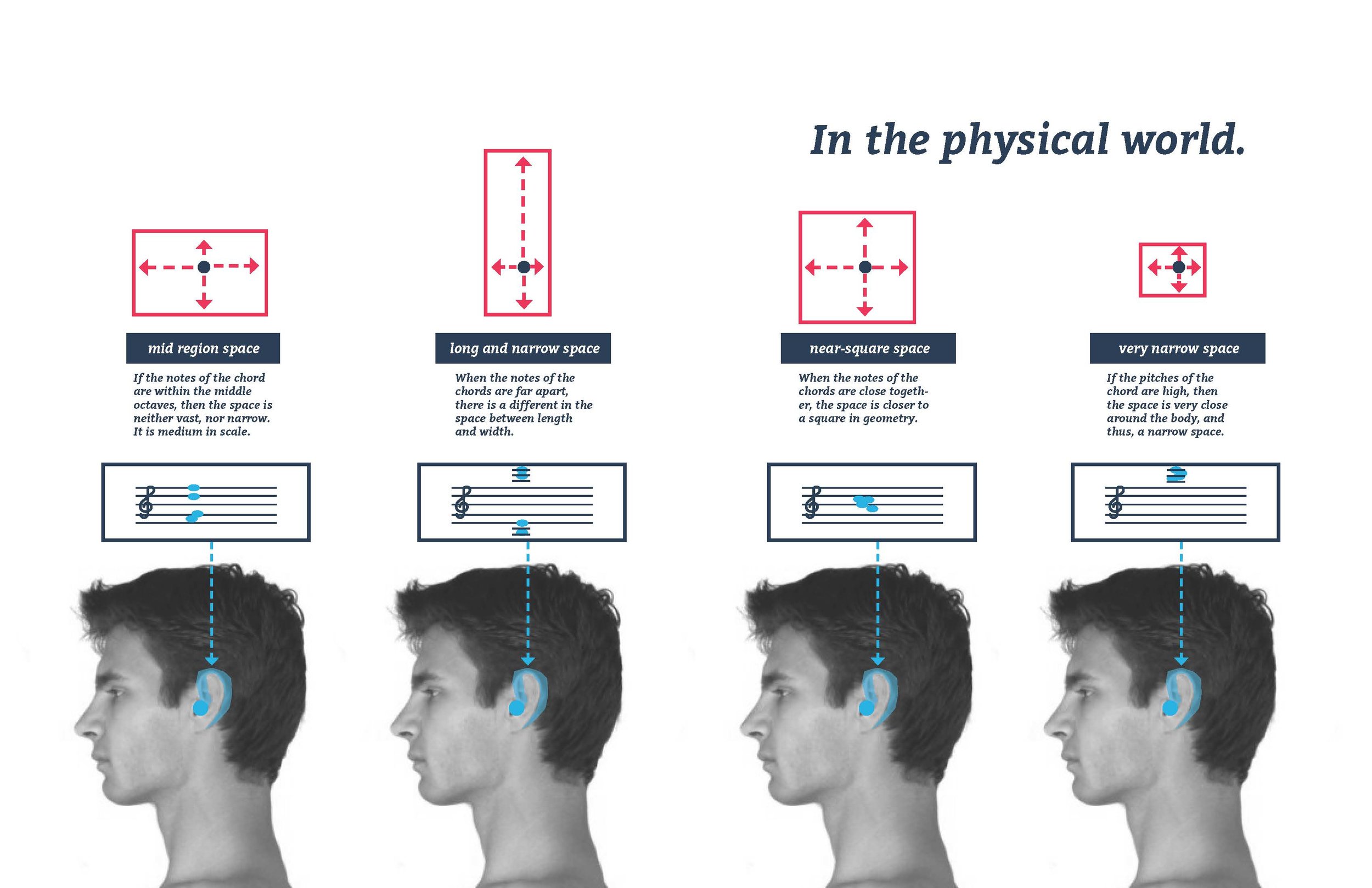

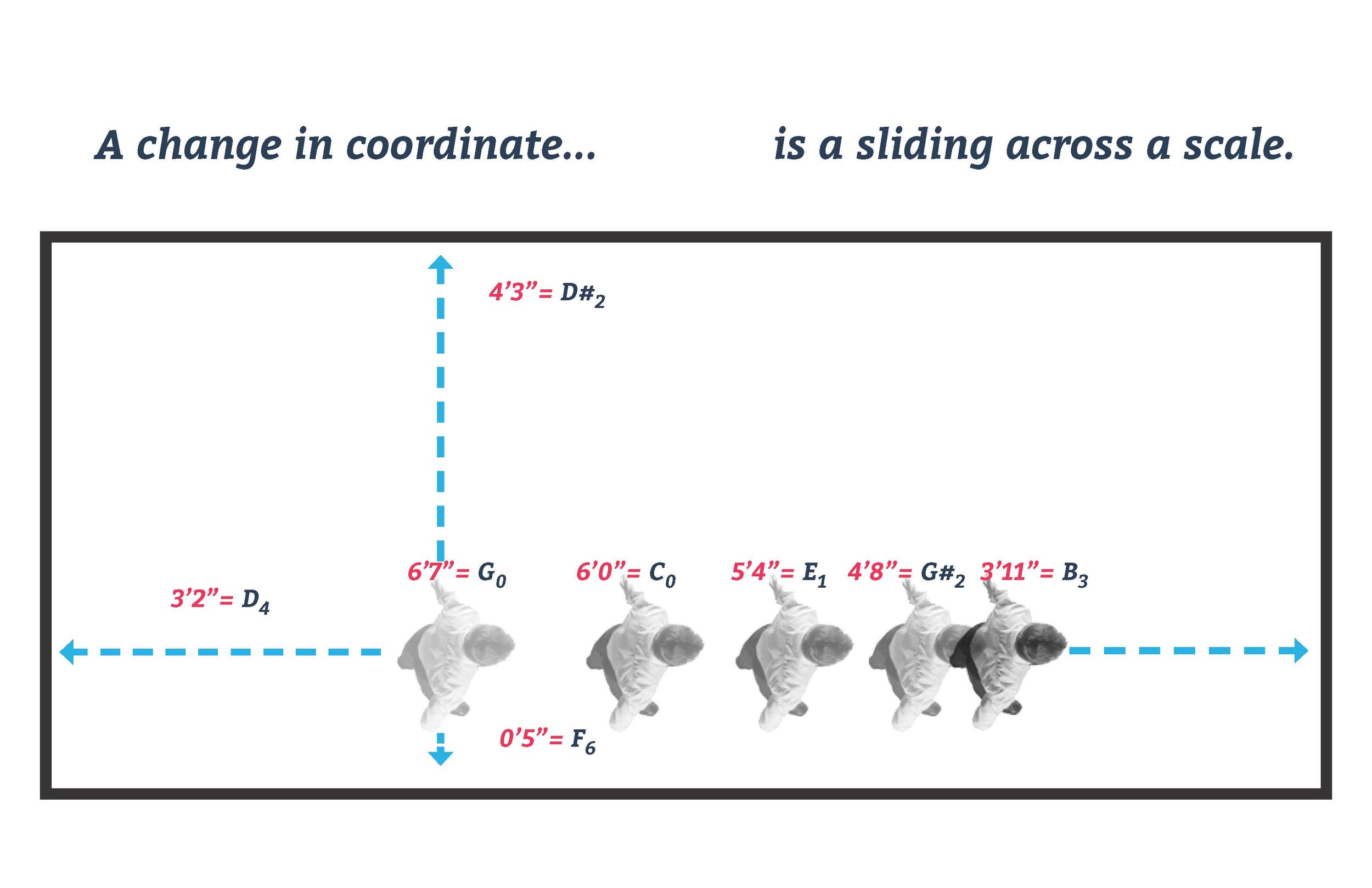

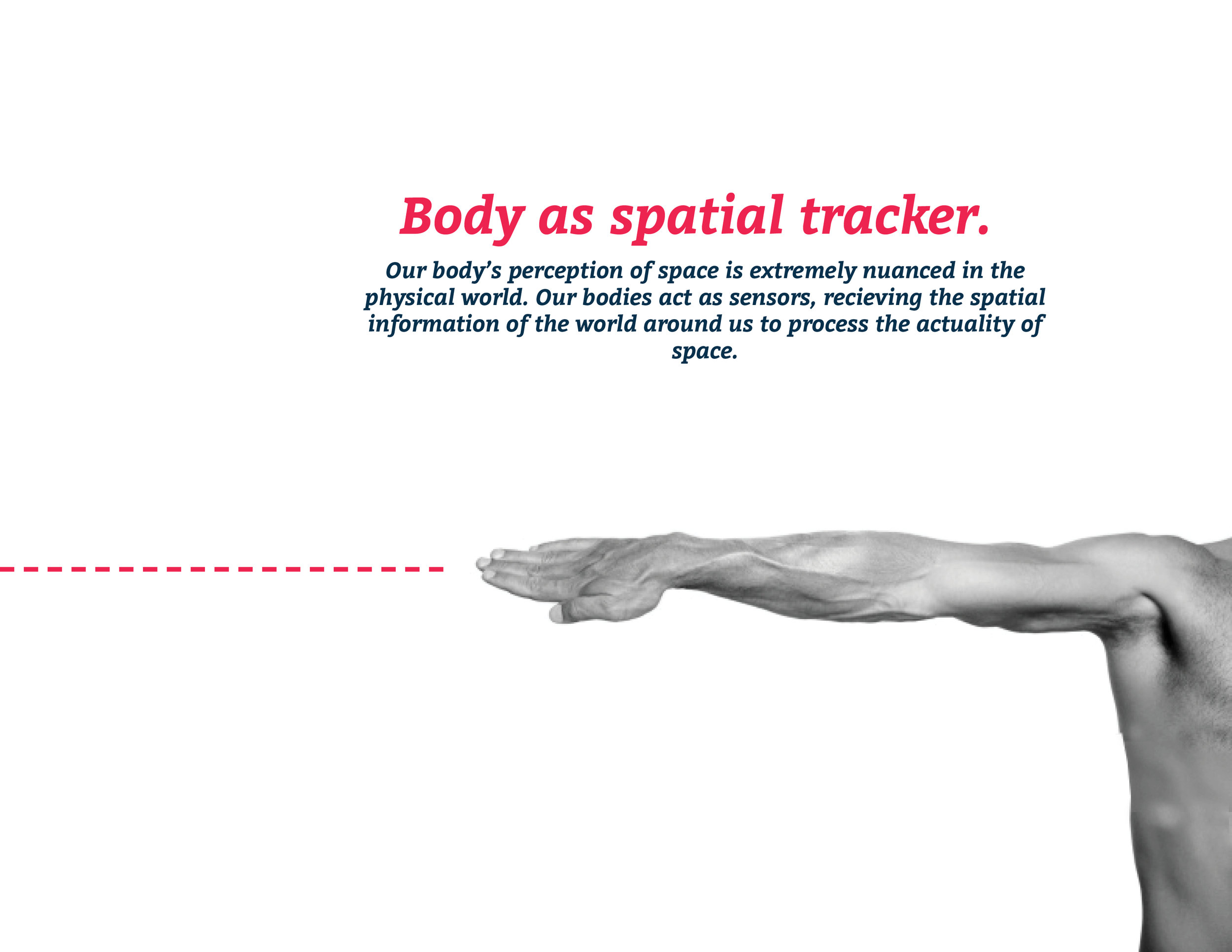

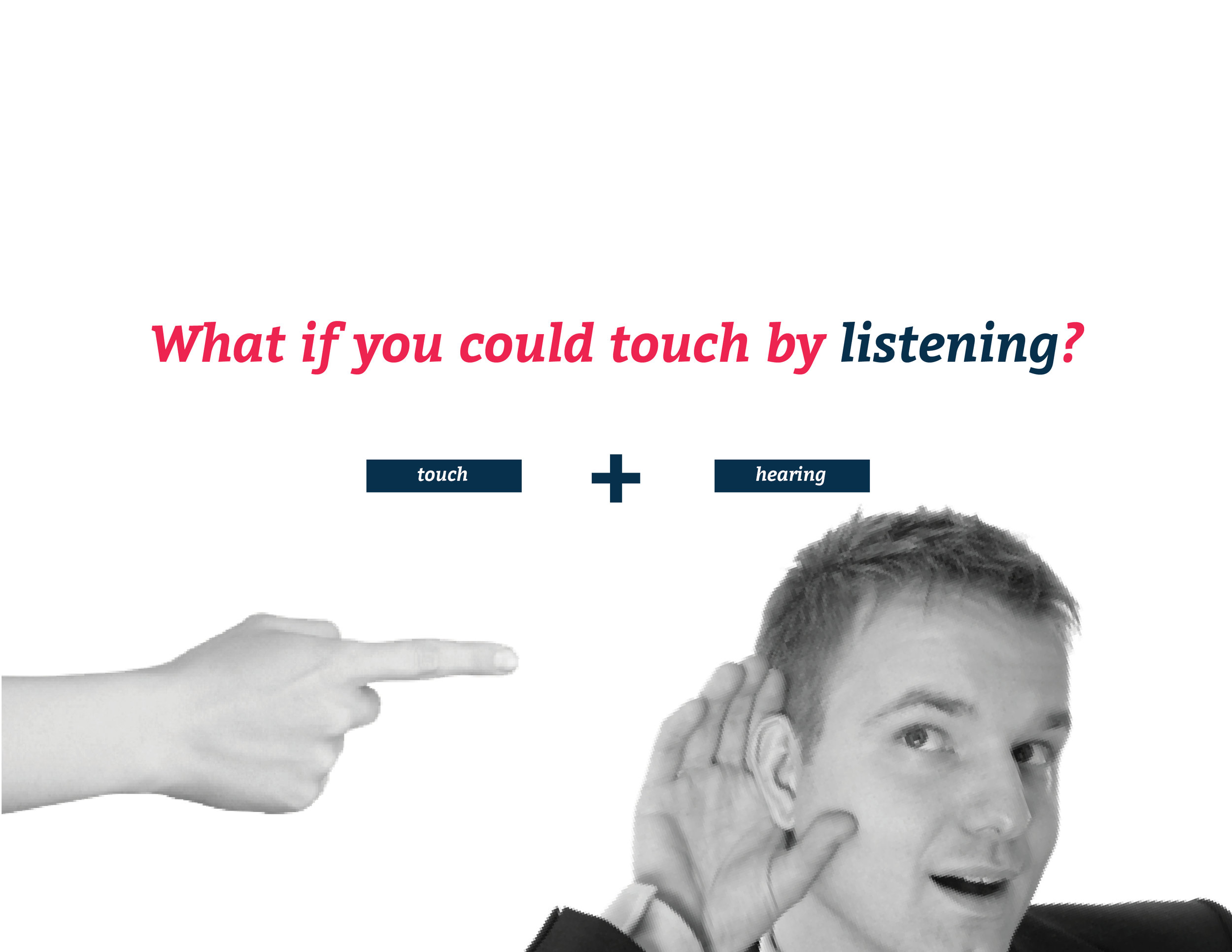

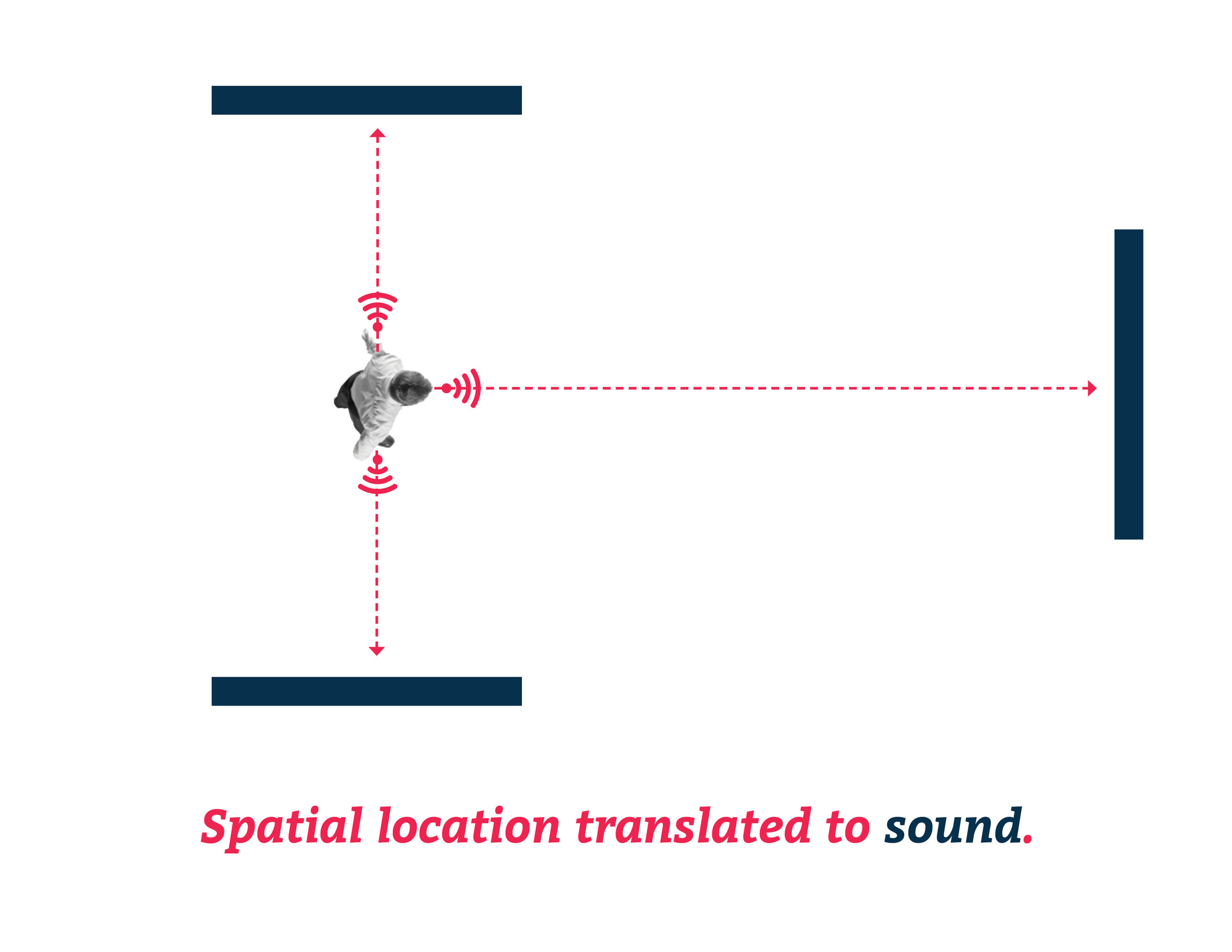

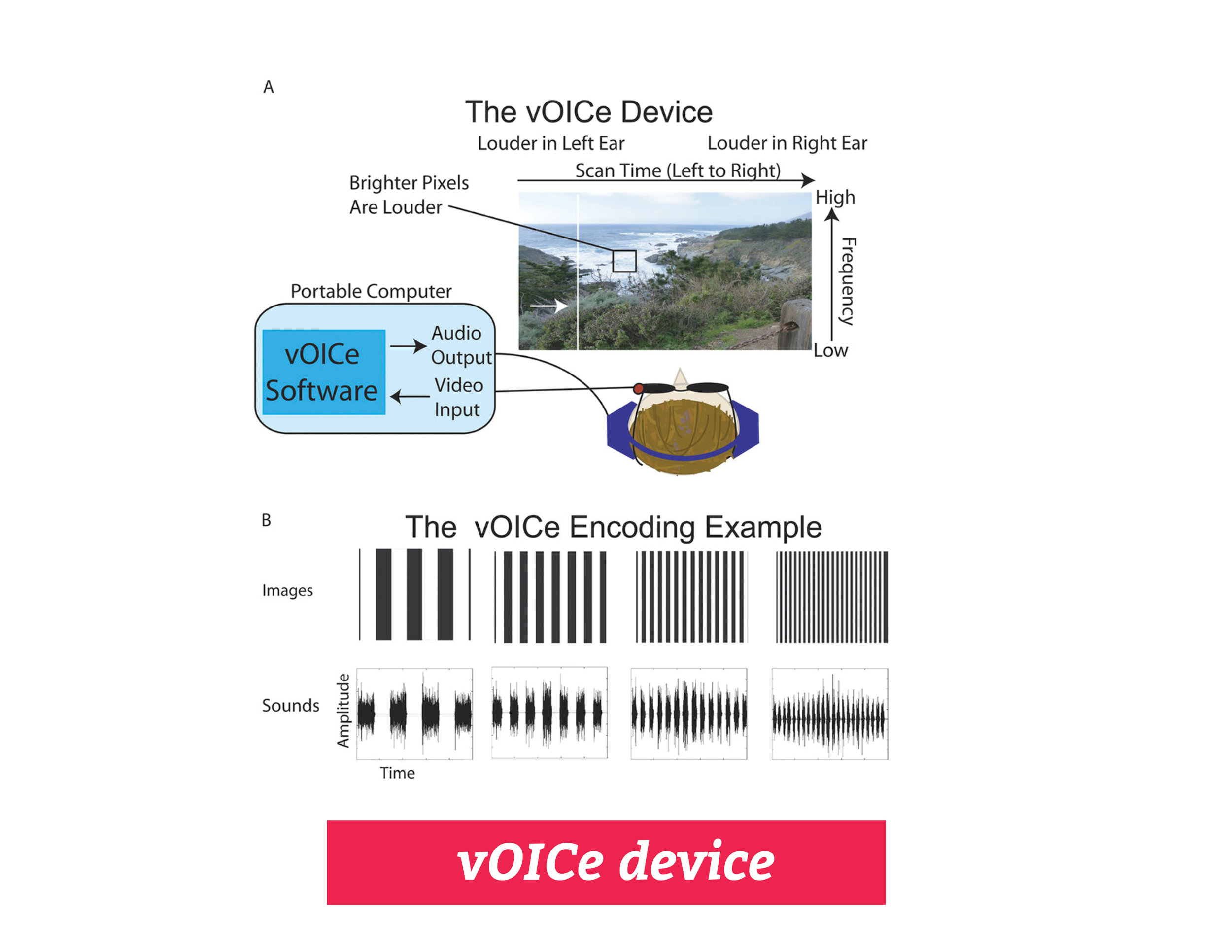

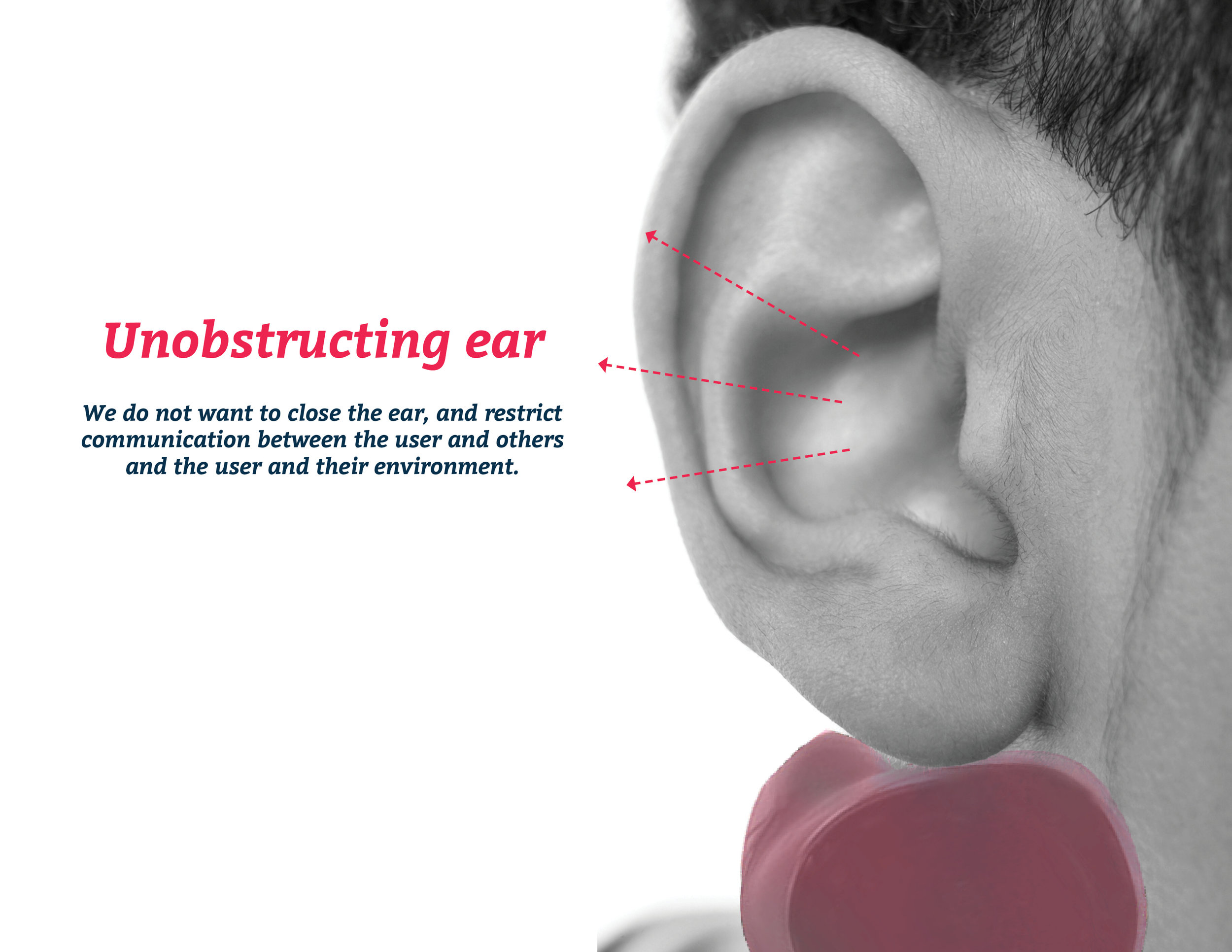

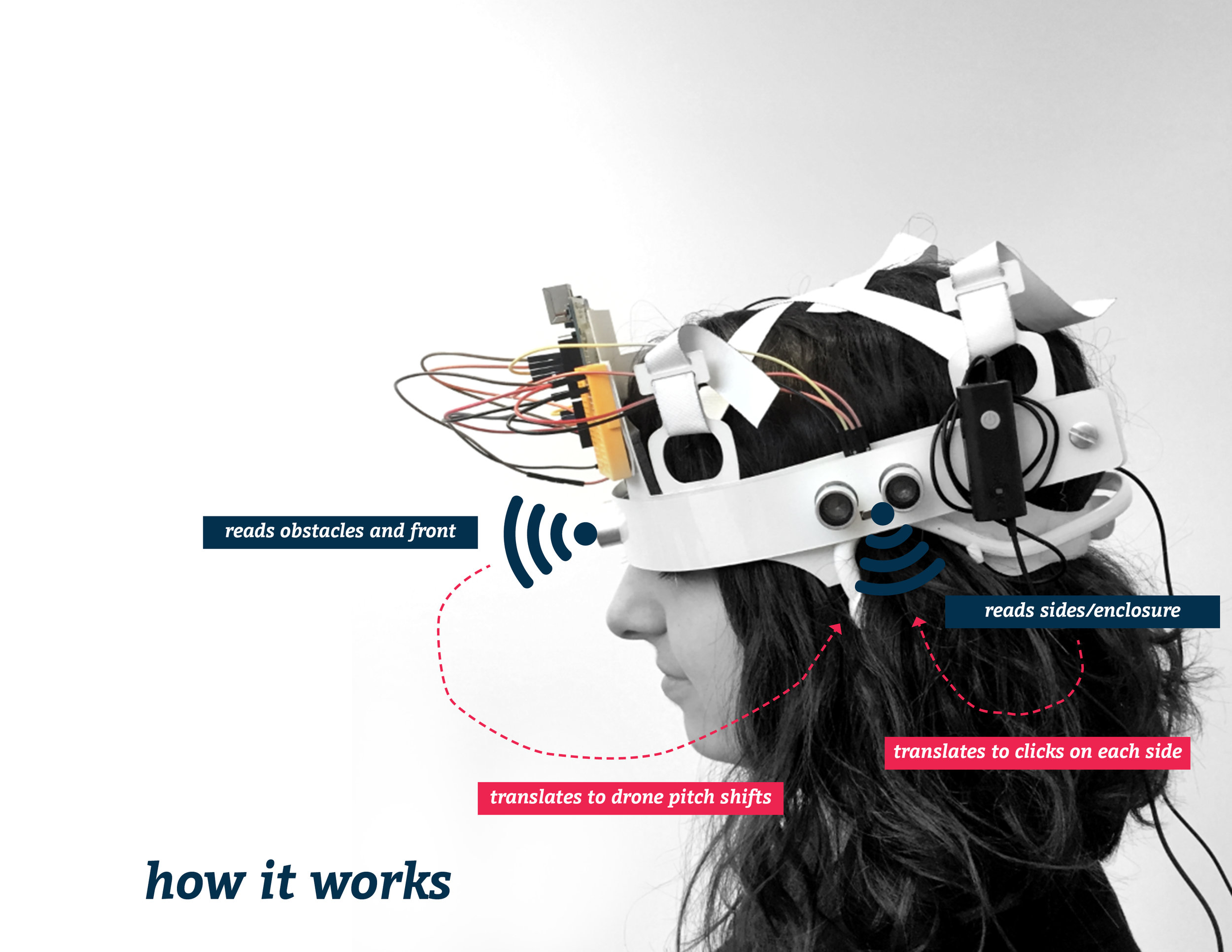

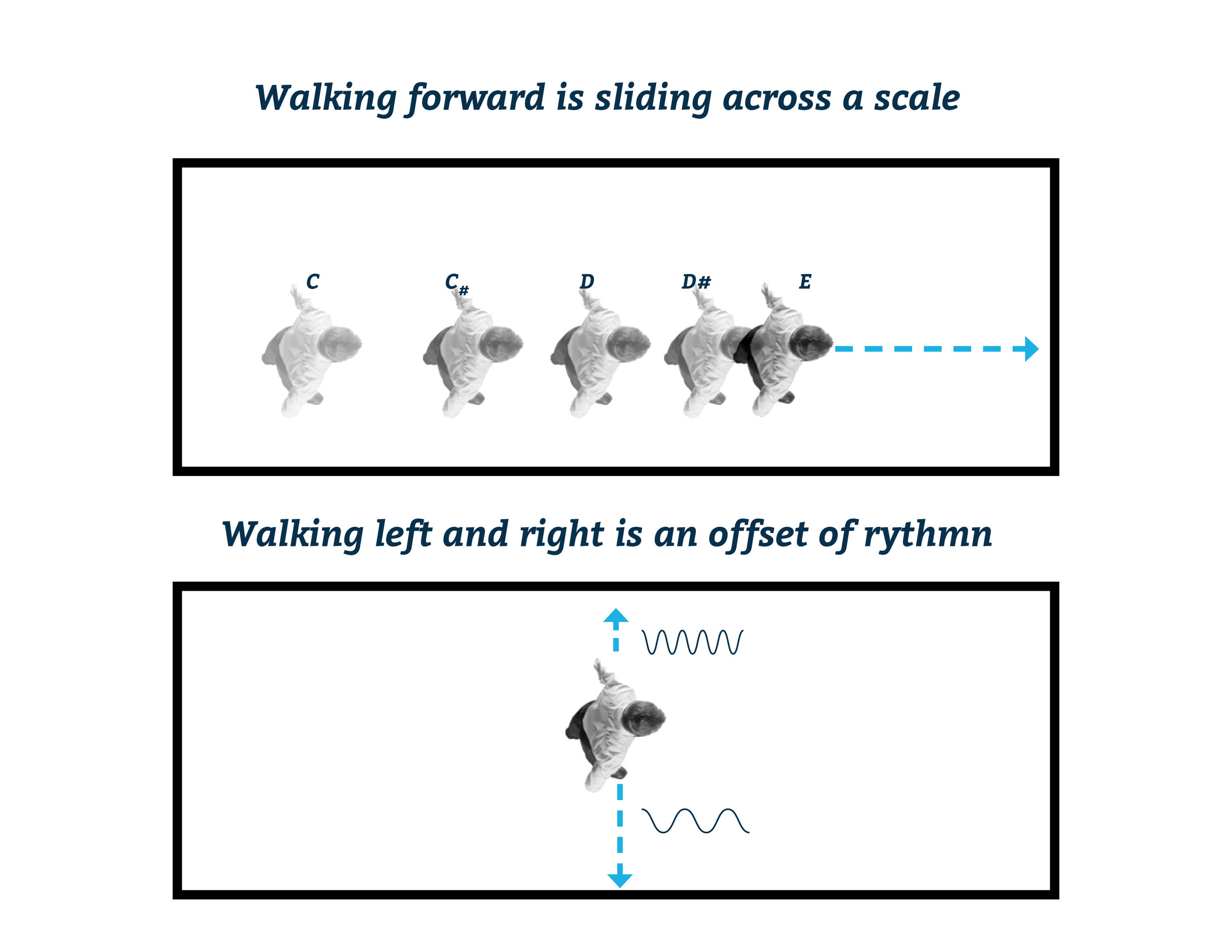

Gracenote is a wearable that augments spatial perception by translating spatial data into the colors of sound chords. Distances recorded by its sensors are interpreted as measured music, so that space is understood through pitch.

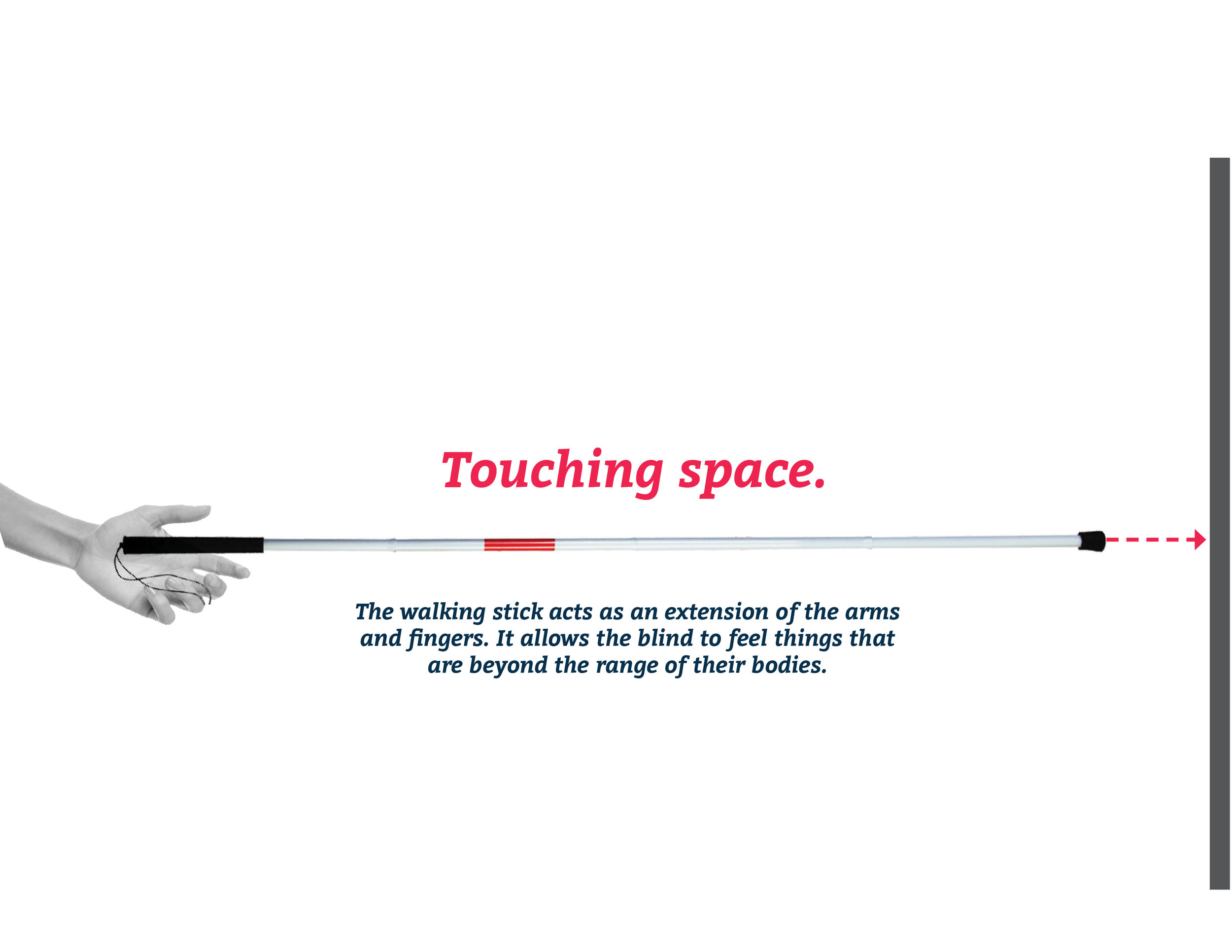

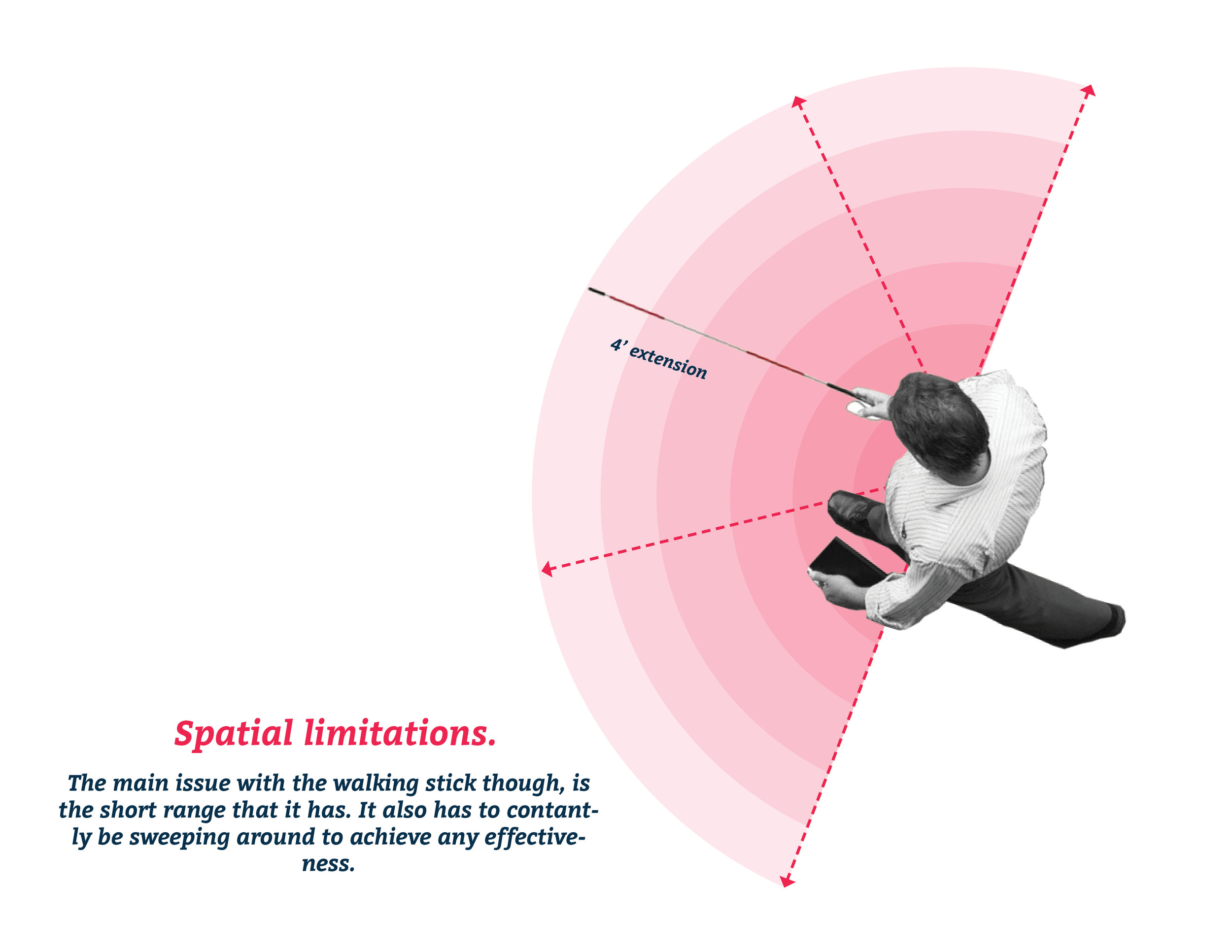

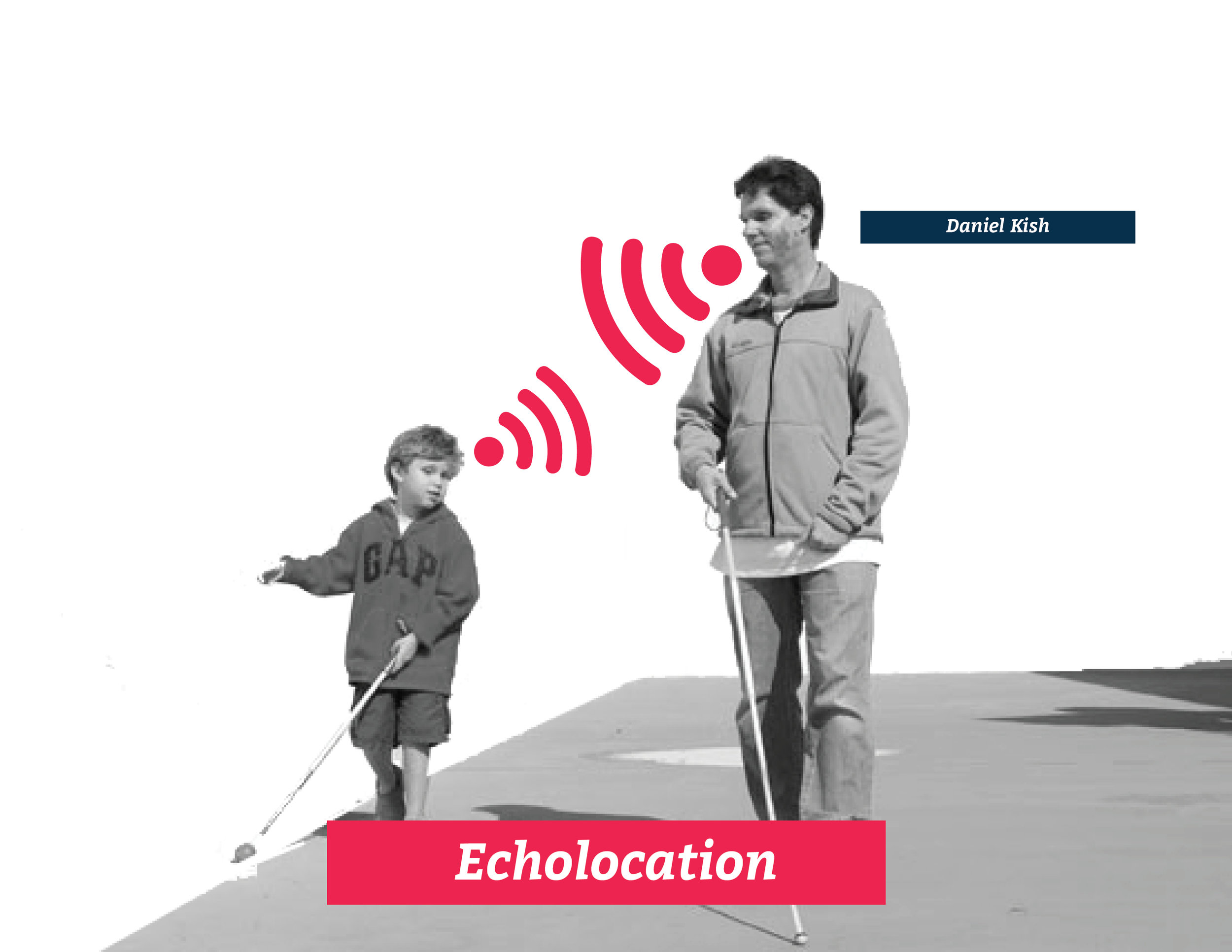

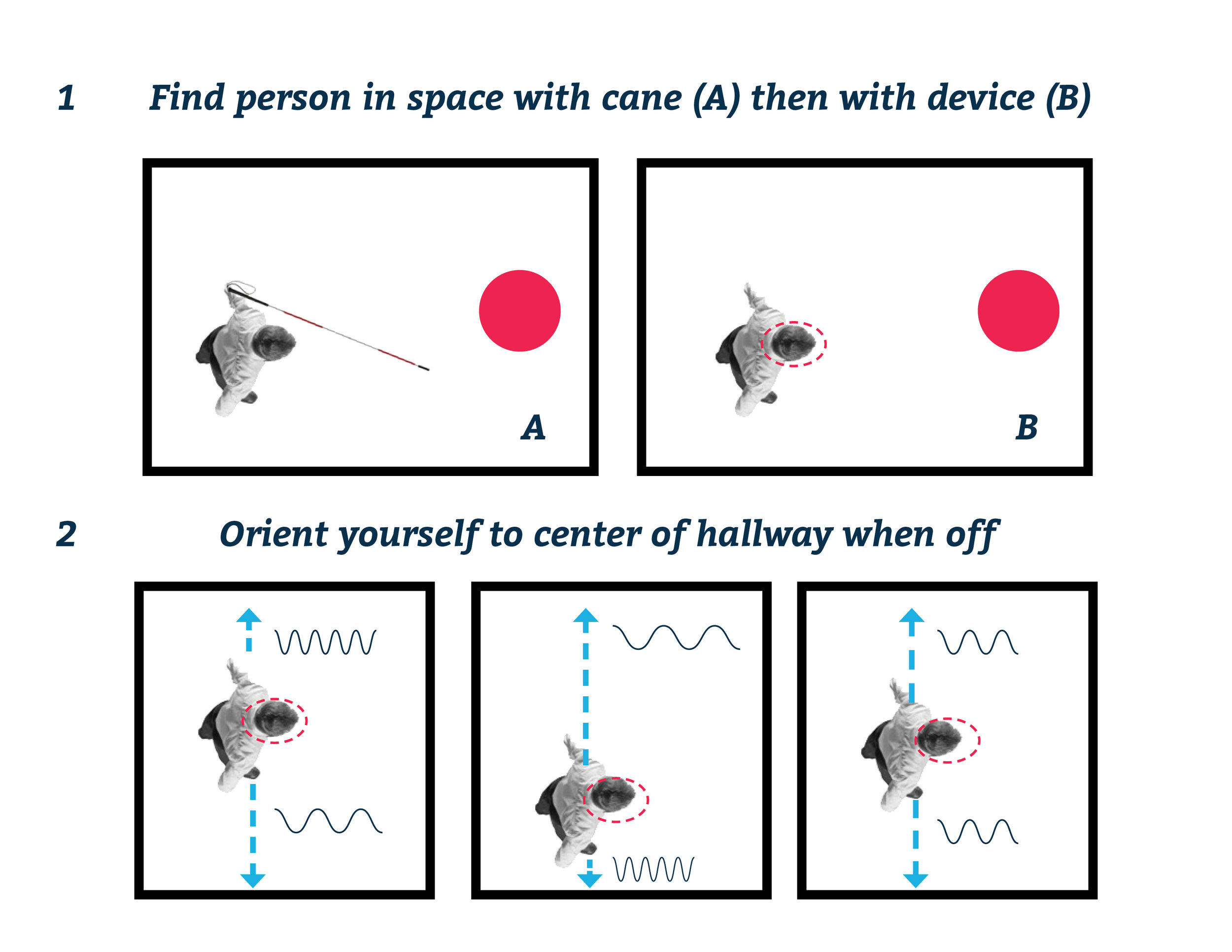

Despite decades of improvement in electronics, there is still no modern device for the blind as ubiquitous as the cane. Indeed, the simple cane is an effective prosthetic: its light weight exacts minimal metabolic cost from the user, the richness of the information it conveys increases with experience, and, perhaps most importantly, it is a universally accepted sign of blindness. This makes the prosthetic a two-way channel of communication: its signal to the outside world can bring a sense of comfort, while the cane itself serves as an additional source of sensory information.

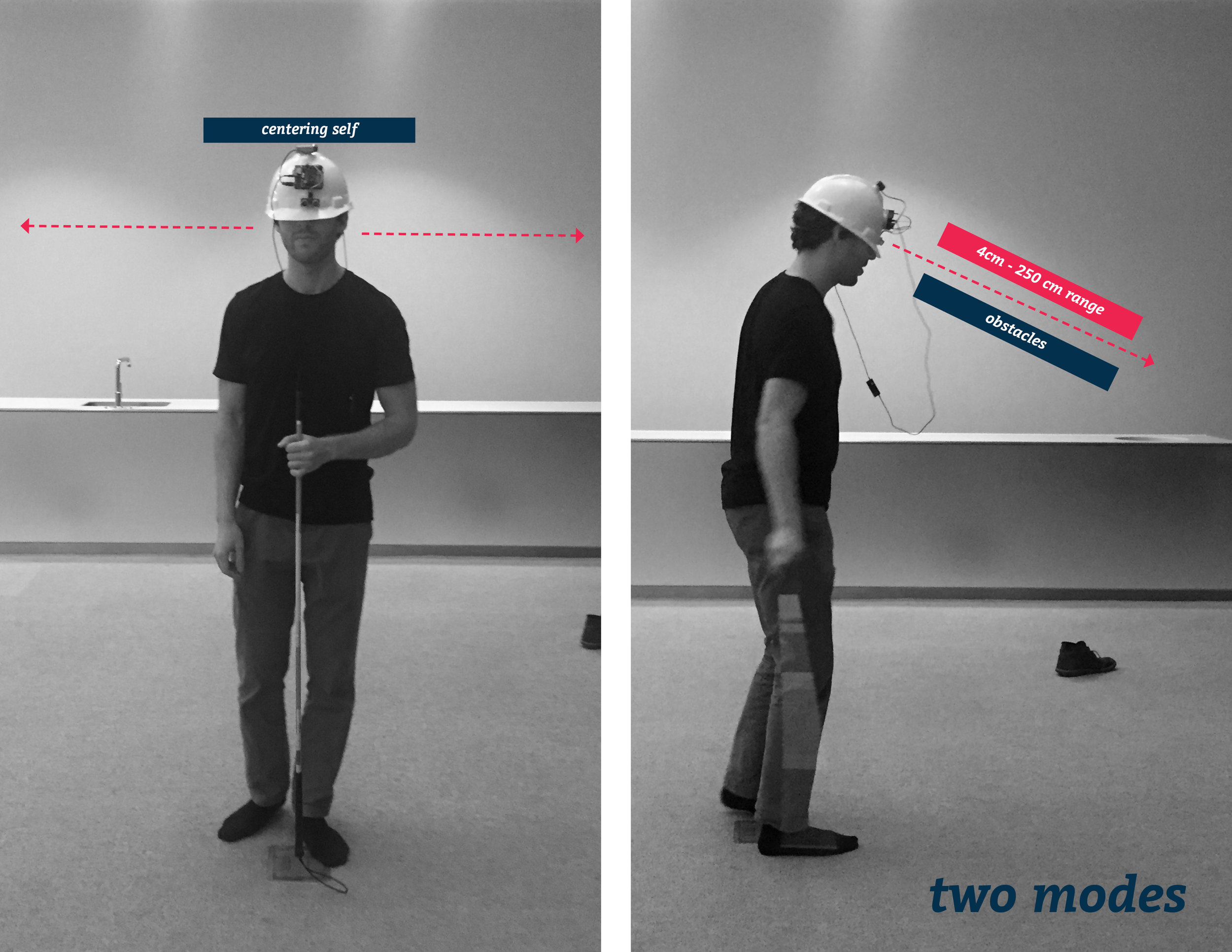

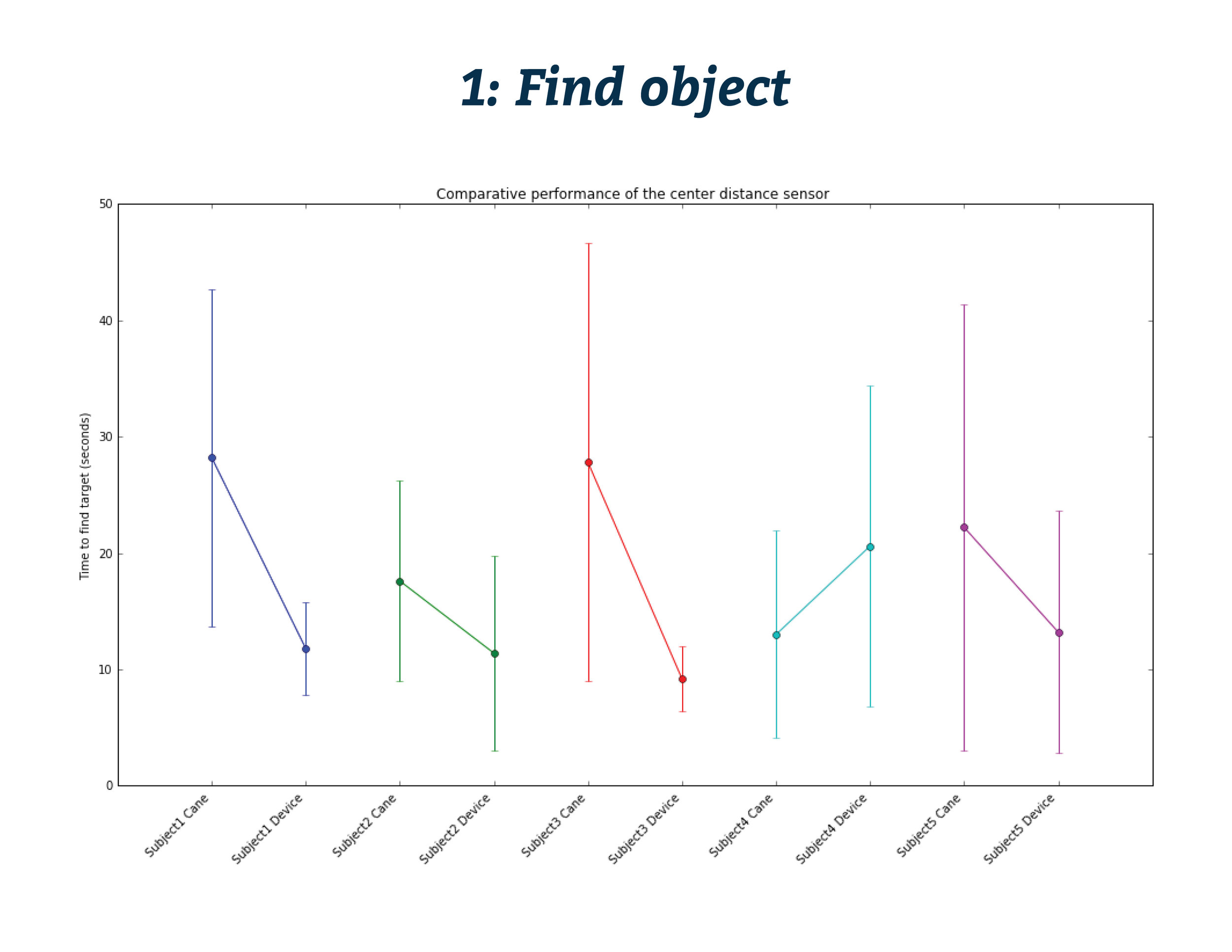

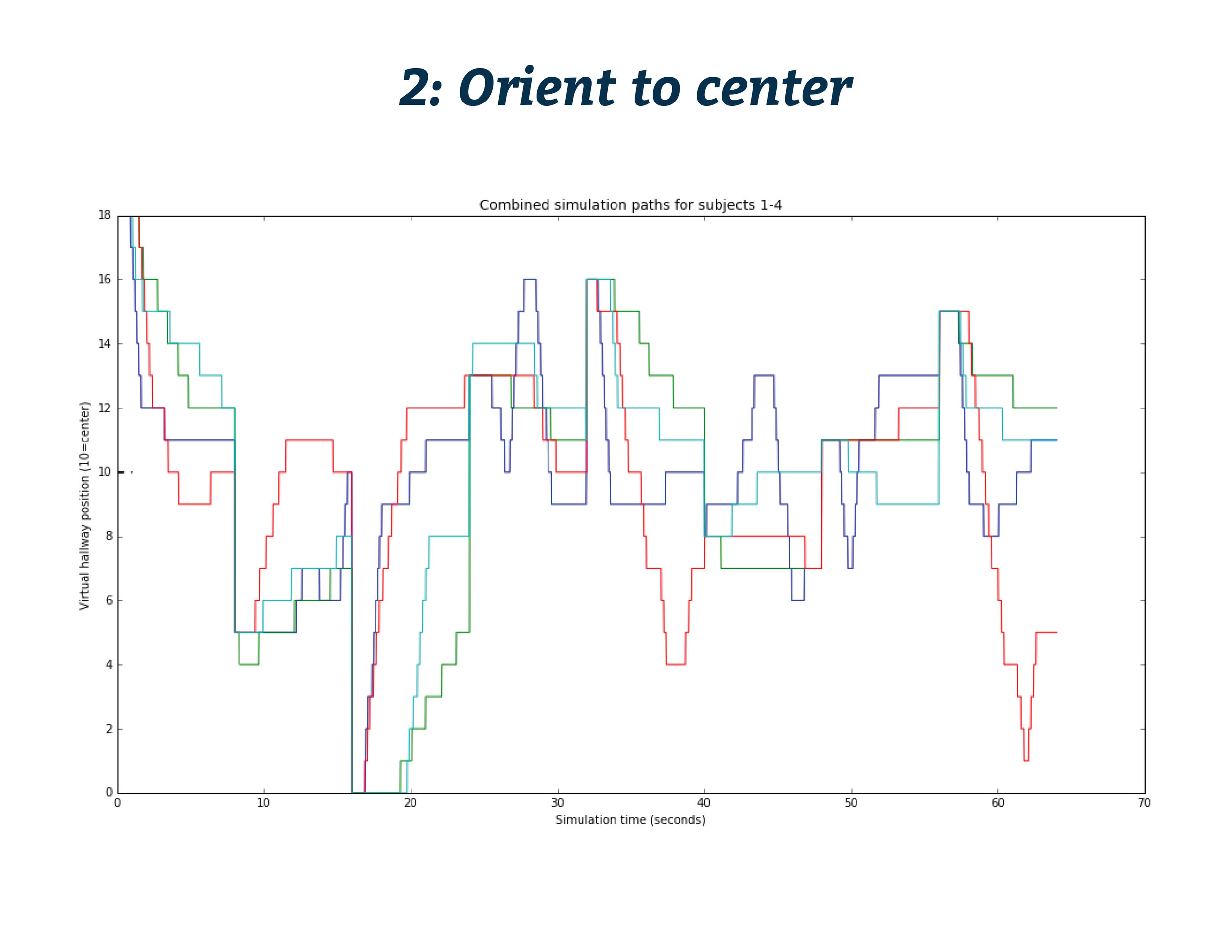

The ambition of this project was to explore the use of sound to augment the stream of spatial information. Specifically, this exploration focused on situations where the necessary information is out of the cane's range. In the first task, we developed a method for localizing objects at distances of 4 to 8 feet. In the second task, we built a device to assist with the specific navigation task of walking down a hallway. This task is representative of a broader challenge of blindness: charting courses through real-world environments that are obvious to sighted people but offer few cues to a blind person navigating with a cane.
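To make the distance-to-pitch idea concrete, here is a minimal sketch of how a distance reading in the 4 to 8 foot localization range might be turned into a chord tone. It is not the Gracenote implementation: the clamping range comes from the text above, but the "nearer is higher" direction, the C major arpeggio in `CHORD_TONES`, and the helper names `distance_to_note` and `midi_to_hz` are all hypothetical choices for illustration.

```python
# Hypothetical sketch: not the Gracenote firmware or mapping.
MIN_FT = 4.0  # near edge of the localization range described above
MAX_FT = 8.0  # far edge of the localization range described above

# Hypothetical palette: a C major arpeggio over two octaves (MIDI note numbers),
# ordered from low to high pitch.
CHORD_TONES = [48, 52, 55, 60, 64, 67, 72]


def midi_to_hz(note: int) -> float:
    """Convert a MIDI note number to frequency in Hz (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def distance_to_note(distance_ft: float) -> int:
    """Map a distance to a chord tone; nearer objects give higher pitches (assumed)."""
    # Readings outside the working range are clamped to its edges.
    clamped = min(max(distance_ft, MIN_FT), MAX_FT)
    # 0.0 at the far edge, 1.0 at the near edge.
    nearness = (MAX_FT - clamped) / (MAX_FT - MIN_FT)
    # Quantize to the nearest chord tone so every output stays in the chord.
    index = round(nearness * (len(CHORD_TONES) - 1))
    return CHORD_TONES[index]


if __name__ == "__main__":
    for d in [3.0, 4.0, 6.0, 8.0, 9.5]:
        note = distance_to_note(d)
        print(f"{d:4.1f} ft -> MIDI {note} ({midi_to_hz(note):.1f} Hz)")
```

Quantizing to chord tones rather than sweeping pitch continuously is one way to keep the output "measured music" rather than a siren; a continuous sweep would give finer distance resolution at the cost of musicality.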

Team: Daniel Goodman, Gregory Lubin and Tiffany Kuo - MIT Media Lab, Human 2.0 - Professor: Hugh Herr

Audio Demo

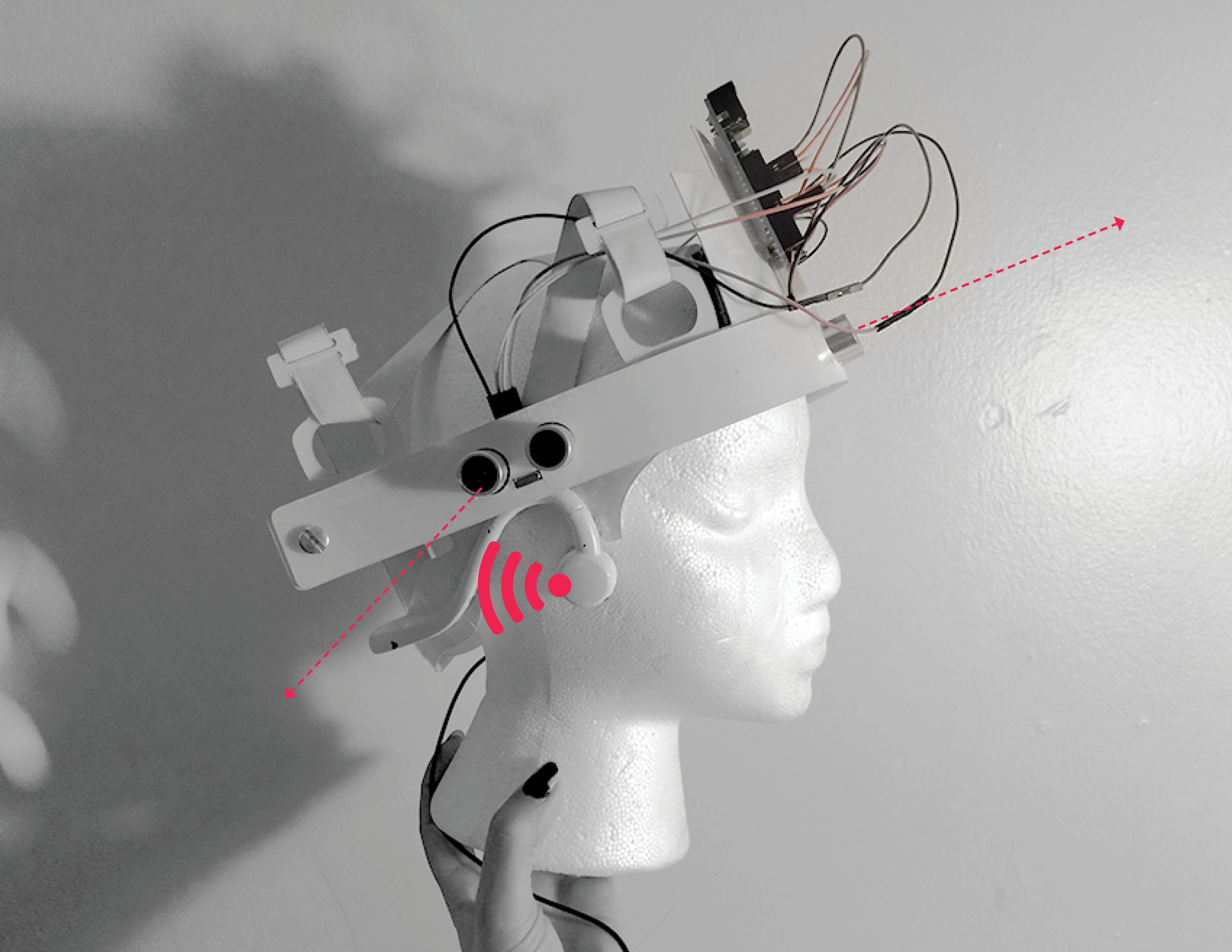

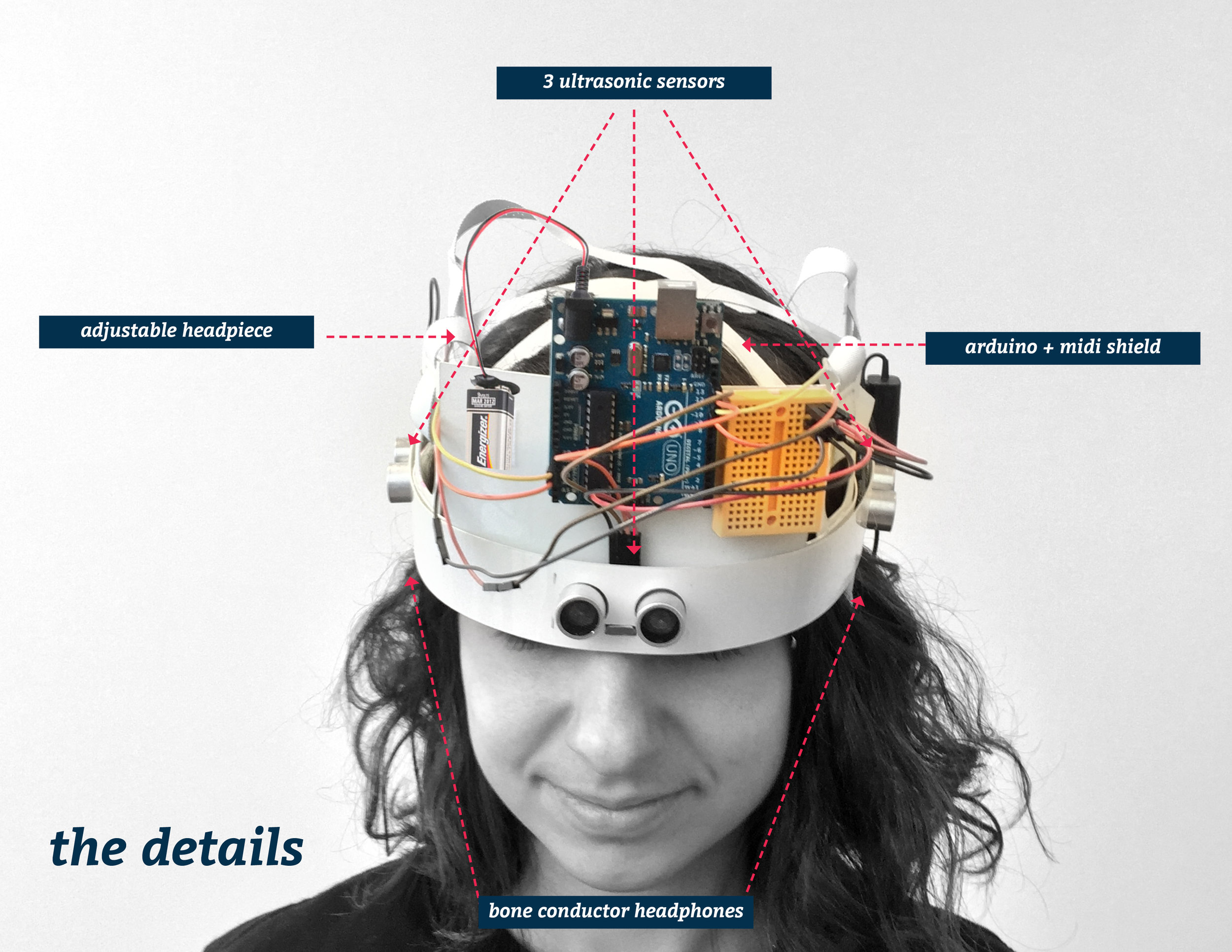

Final Wearable Assembly

System Design