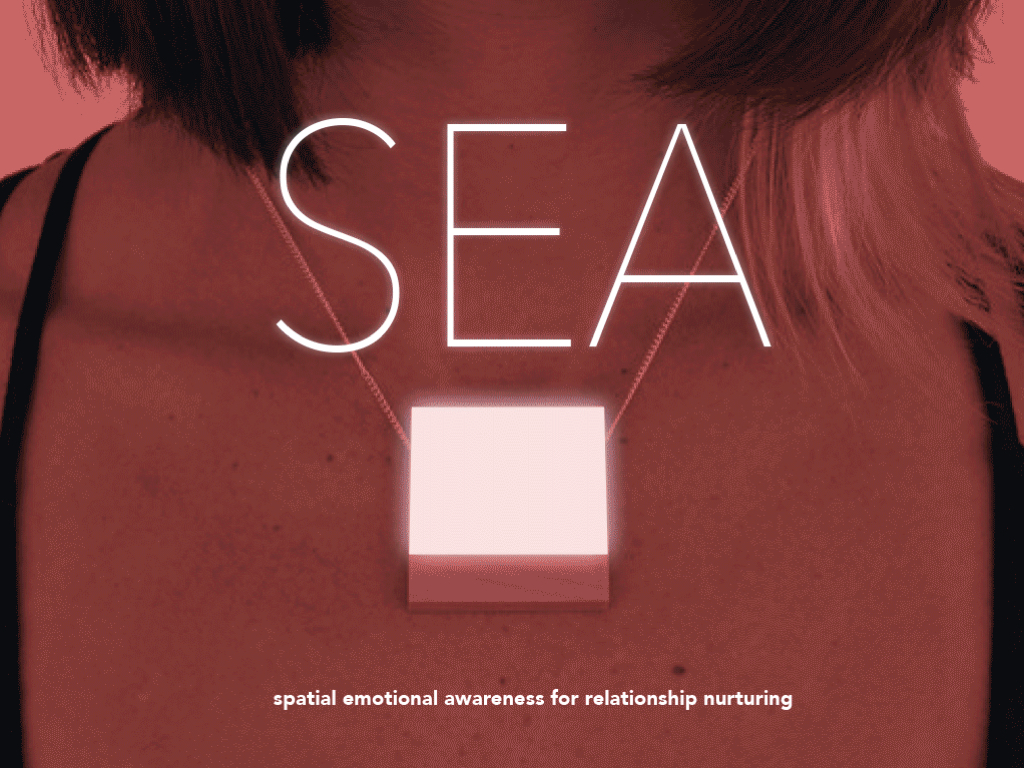

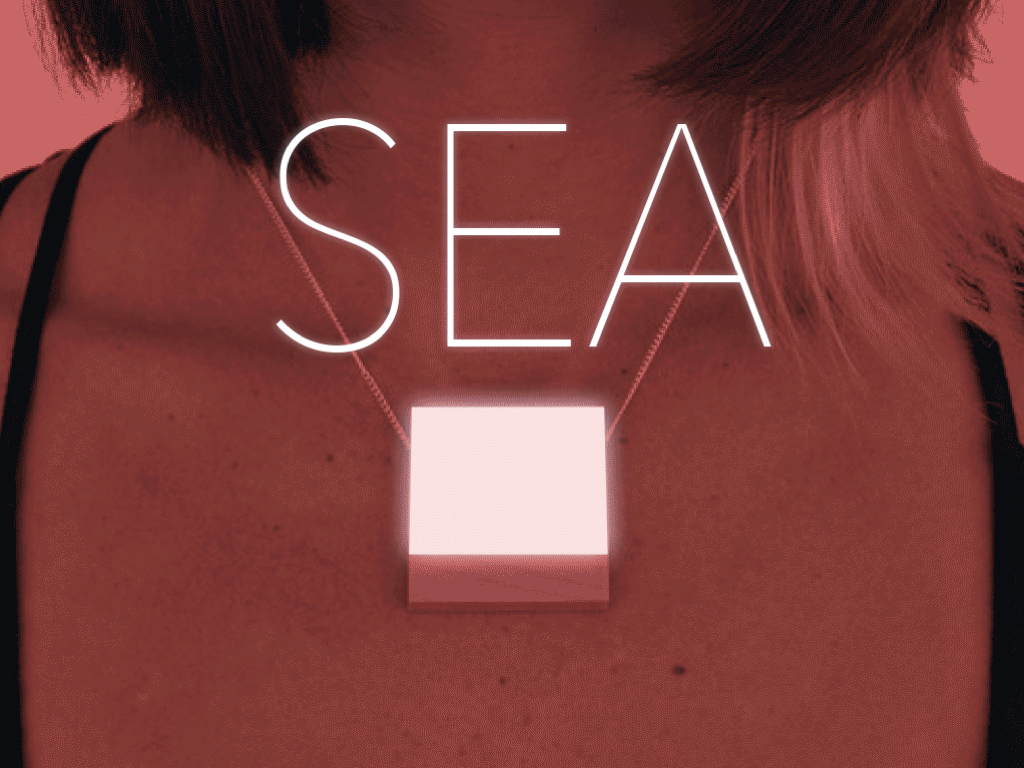

SEA: Spatial emotional awareness

interface design / design research / wearable

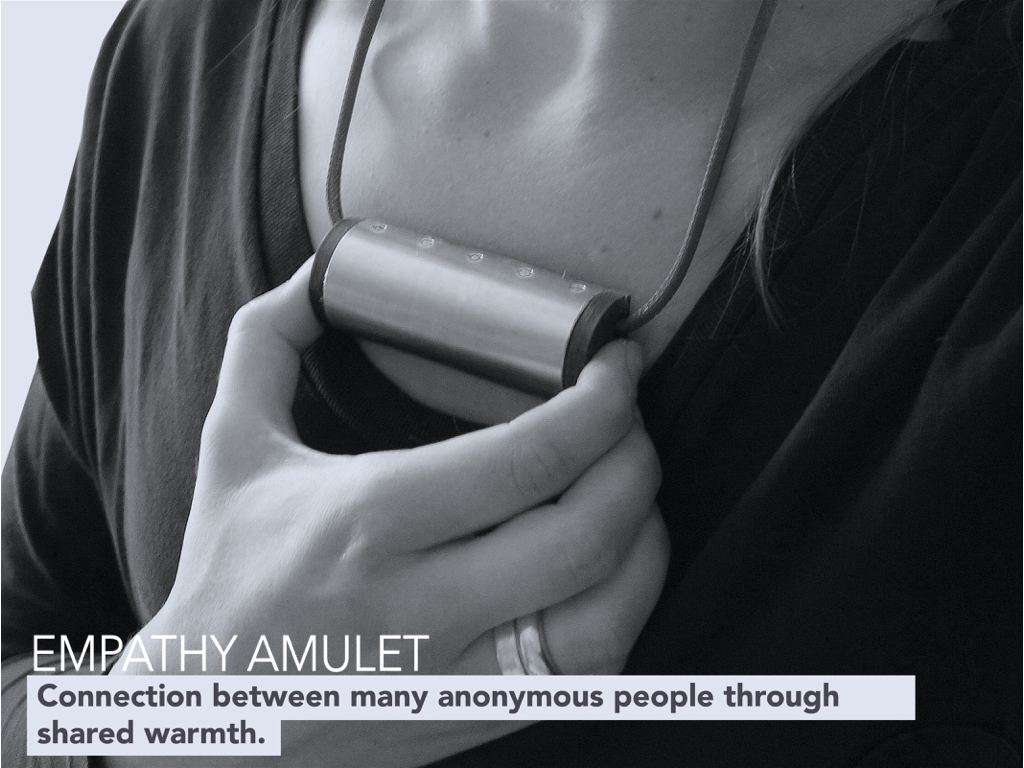

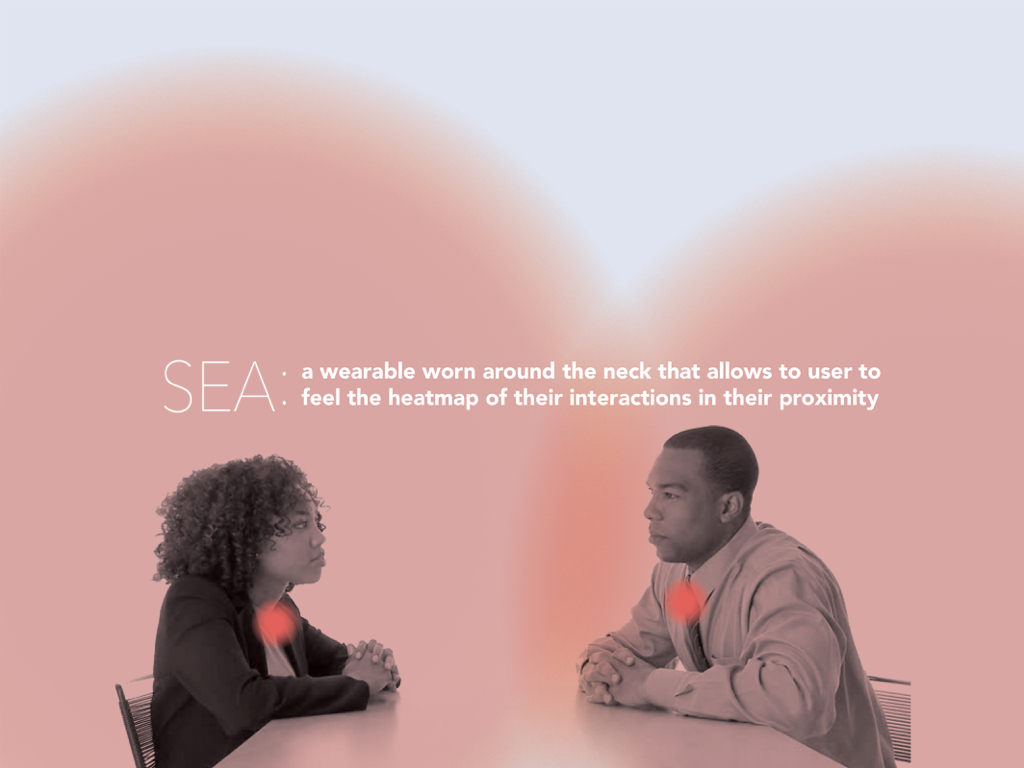

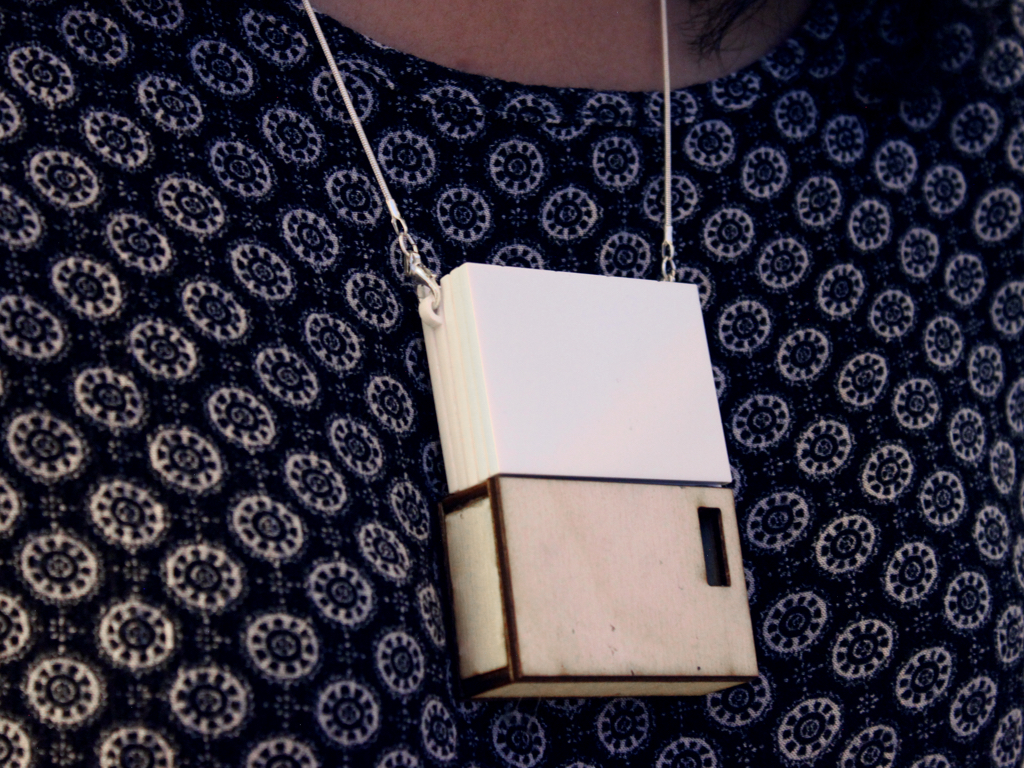

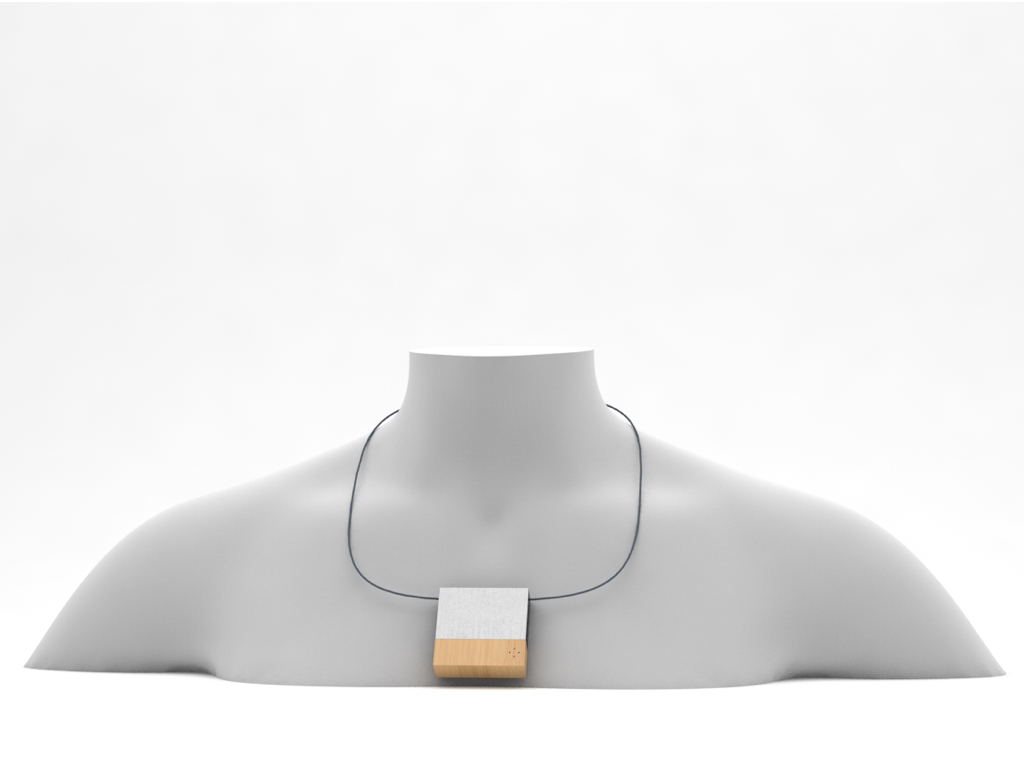

SEA is a spatially aware wearable that uses temperature differentials to allow the user to feel the heat map of their interactions with others in space, in real time. By examining a user’s emotional responses to conversations with people and by providing feedback on a relationship using subtle changes in temperature, SEA allows for more aware interactions between people.

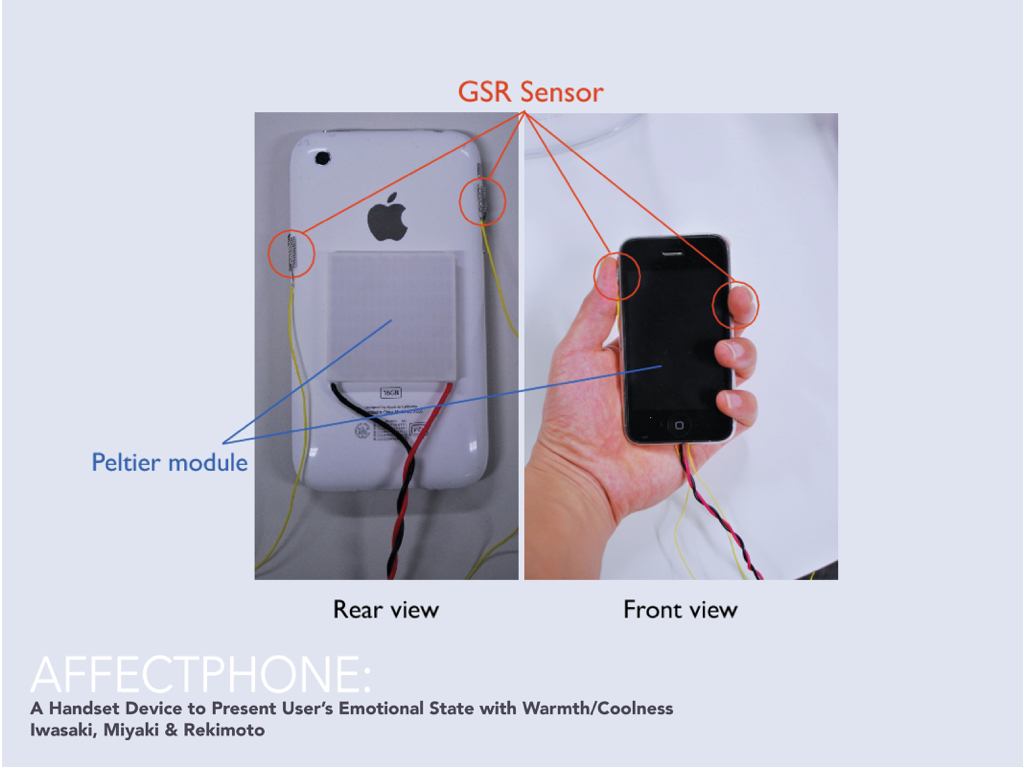

Emotional responses are analyzed and interpreted through the voice and the body. We used a Peltier module as the basis for the temperature change and BLE to determine the location of users. We also developed an iPhone app that gives users a more detailed view of how their relationships have changed over time; it lets them examine their emotional responses to people at a day-to-day level. Ultimately, SEA is a wearable that attempts to help people be more mindful of their own actions and responses in relationships and tries to foster and nurture healthier interactions.

MIT Media Lab | Instructor: Pattie Maes | Human Machine Symbiosis | in collaboration with Laya Anesu, Lucas Cassiano, Anna Fuste, and Nikhita Singh

Spatial Experience

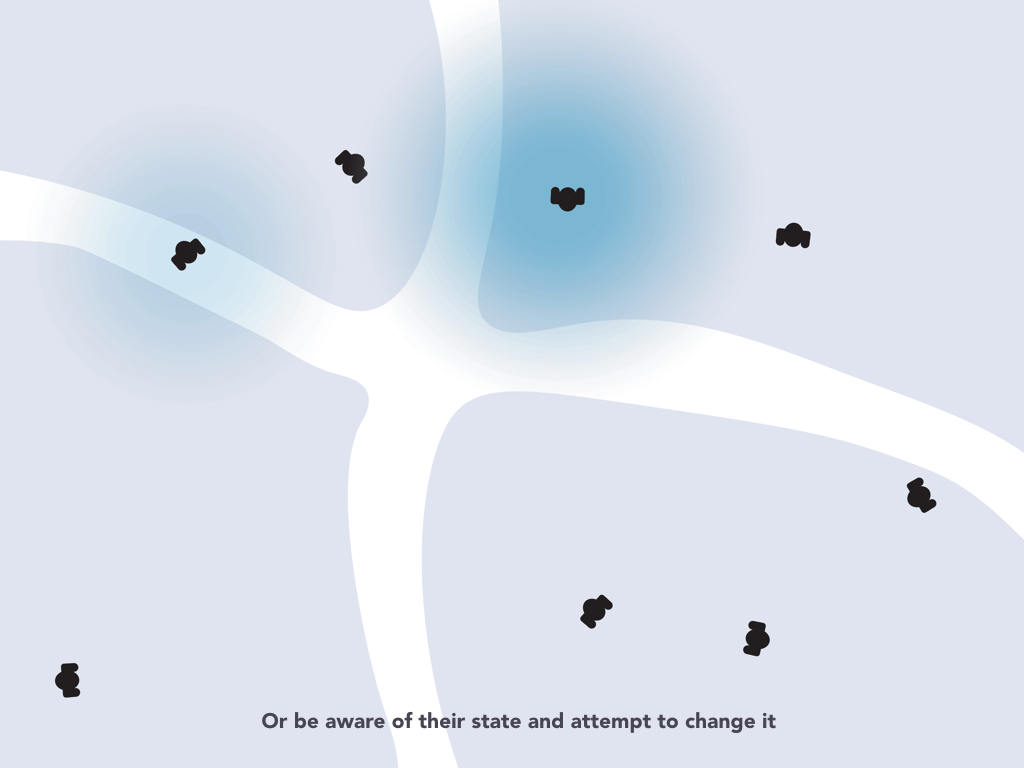

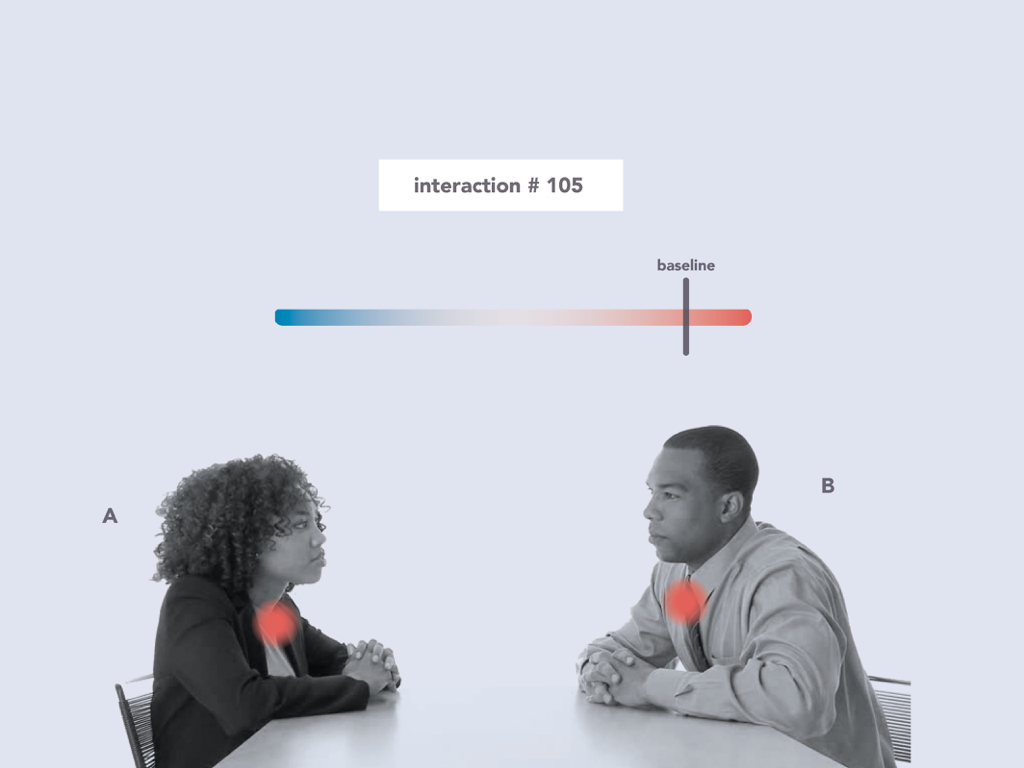

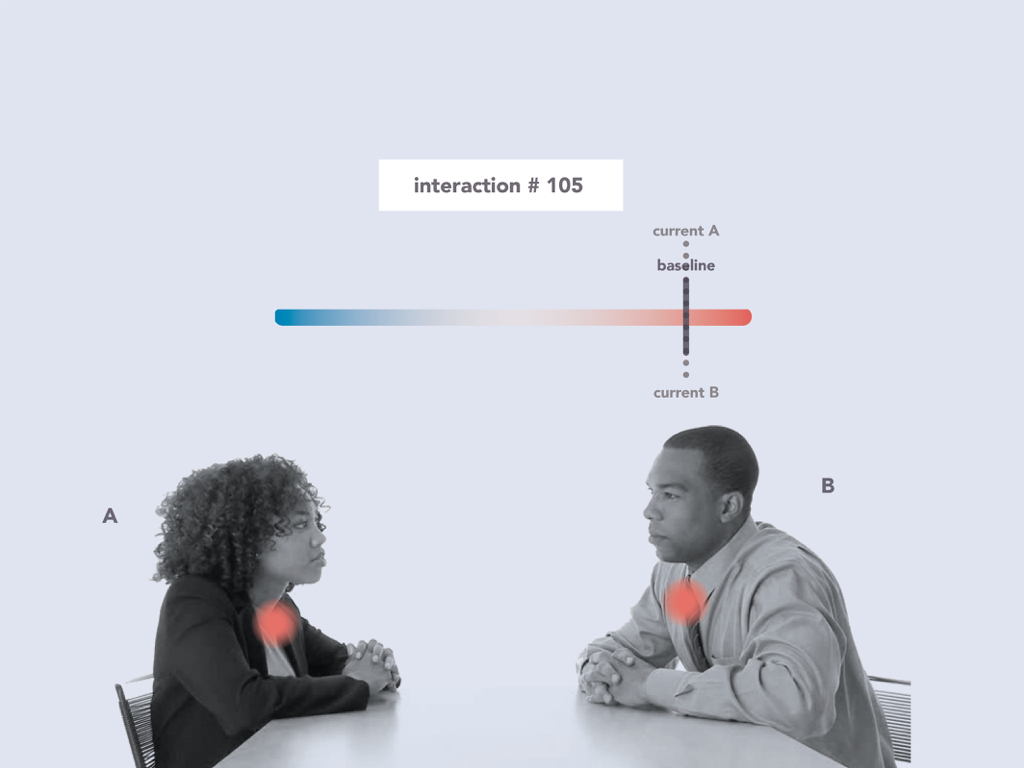

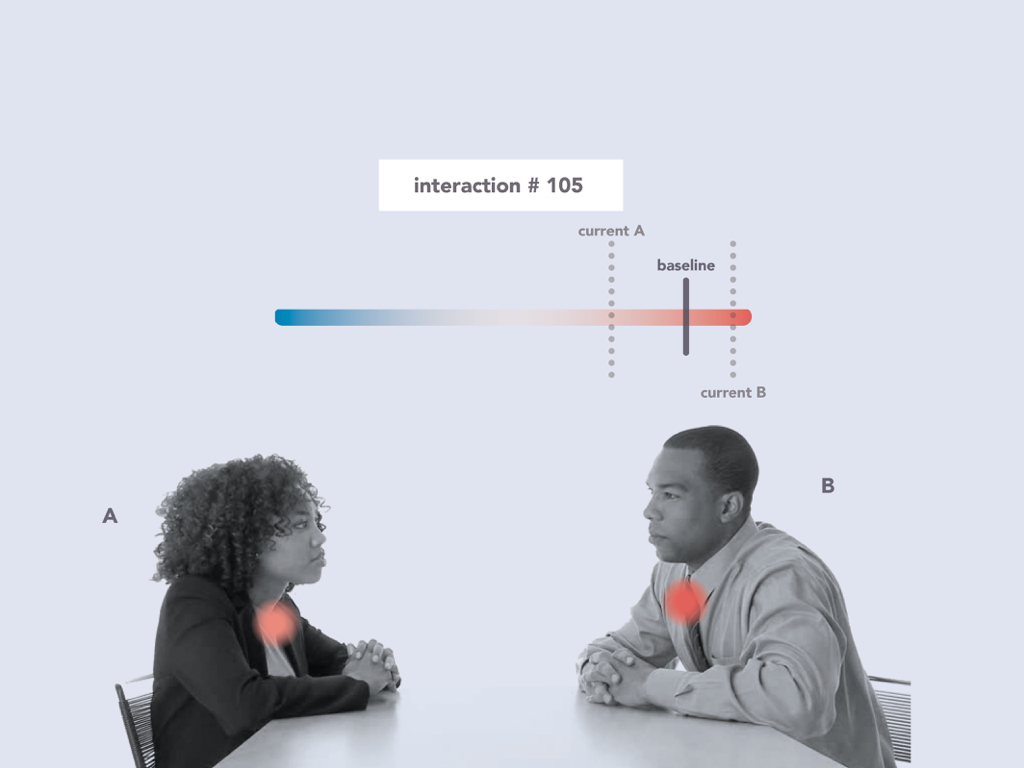

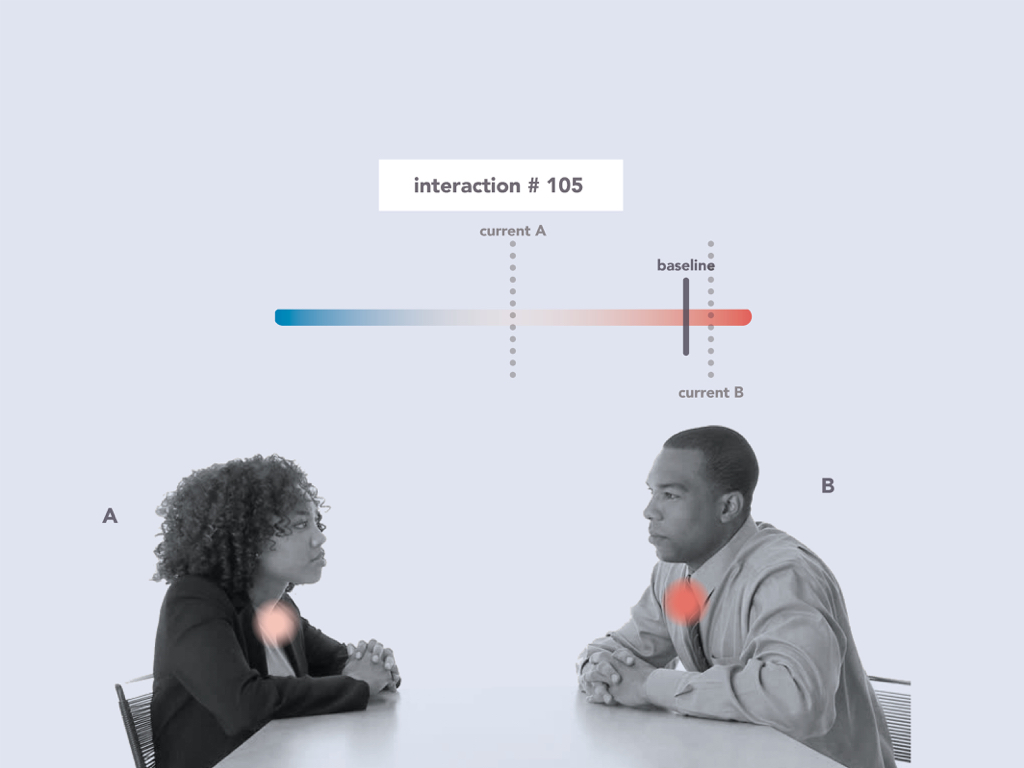

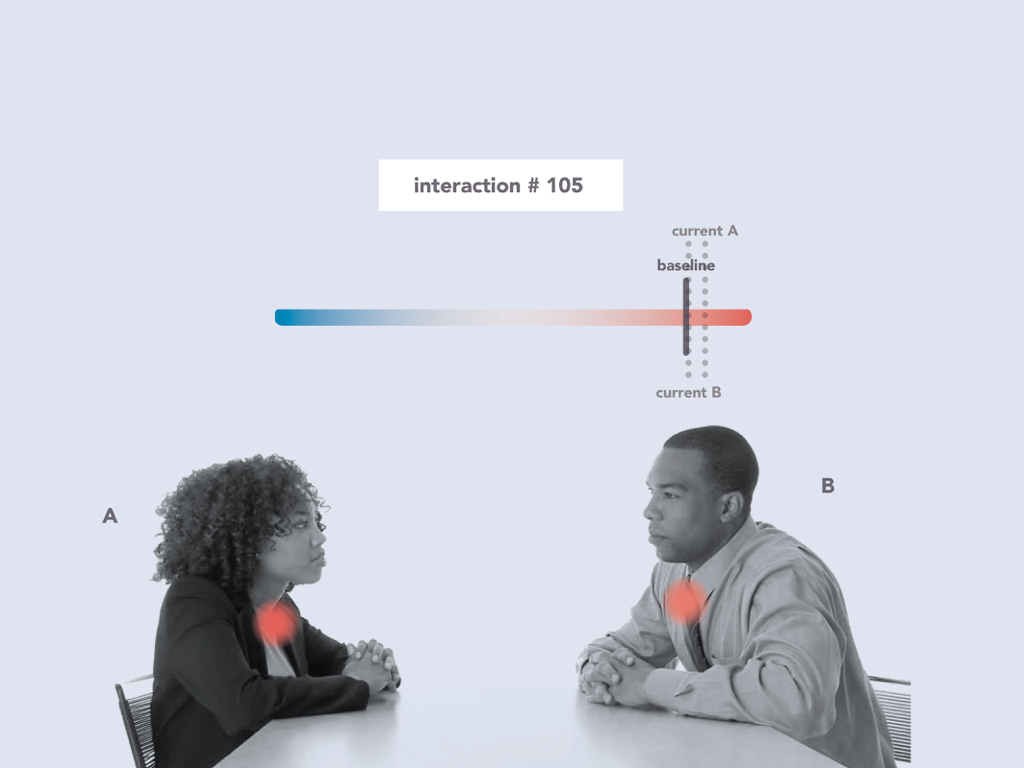

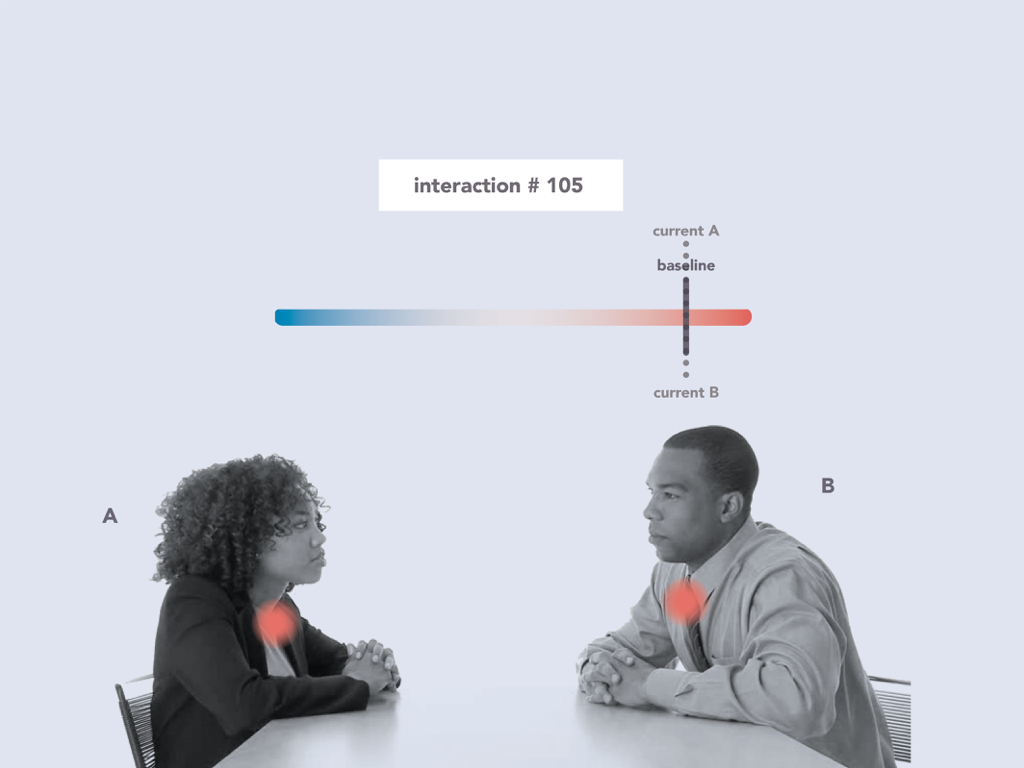

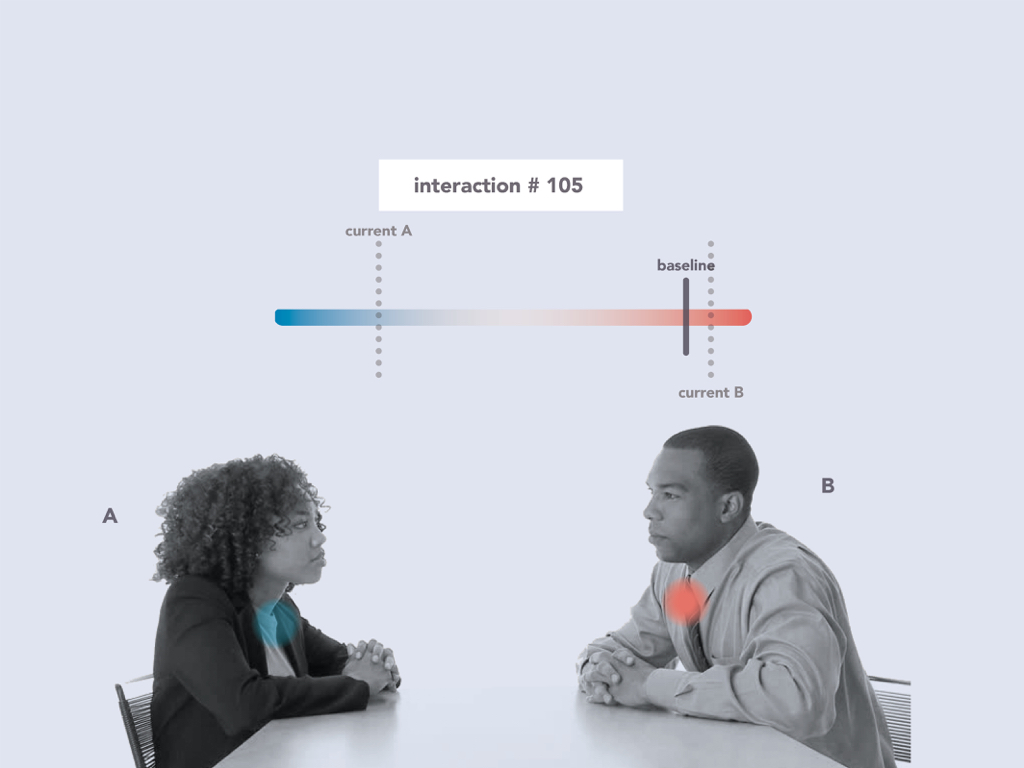

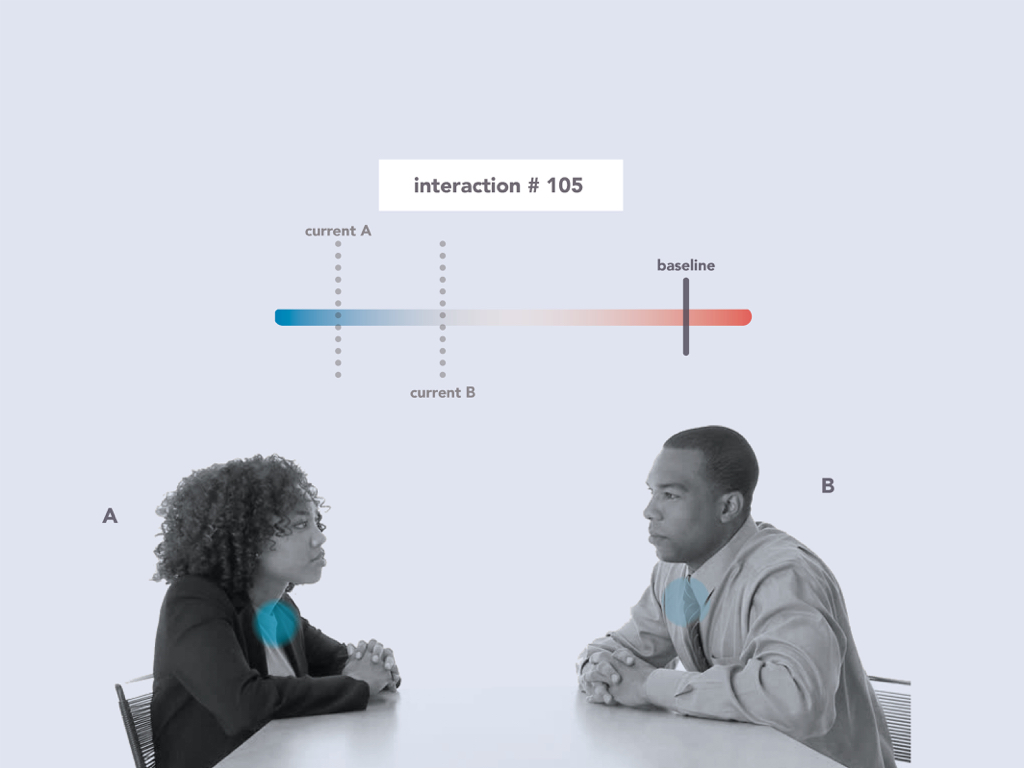

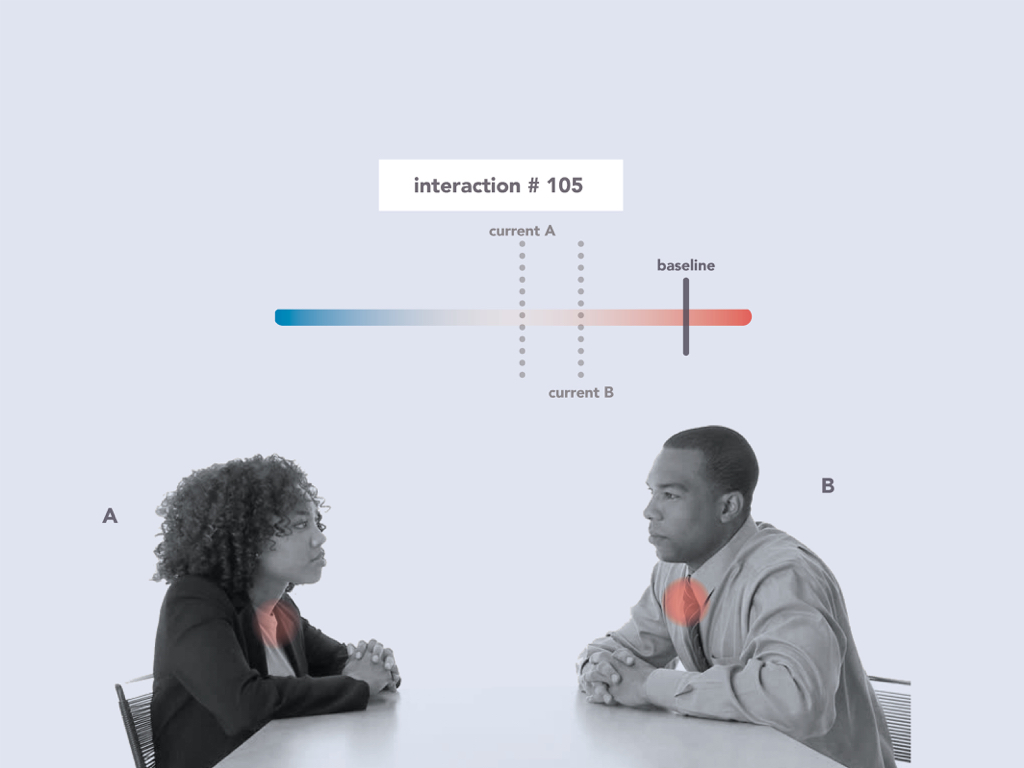

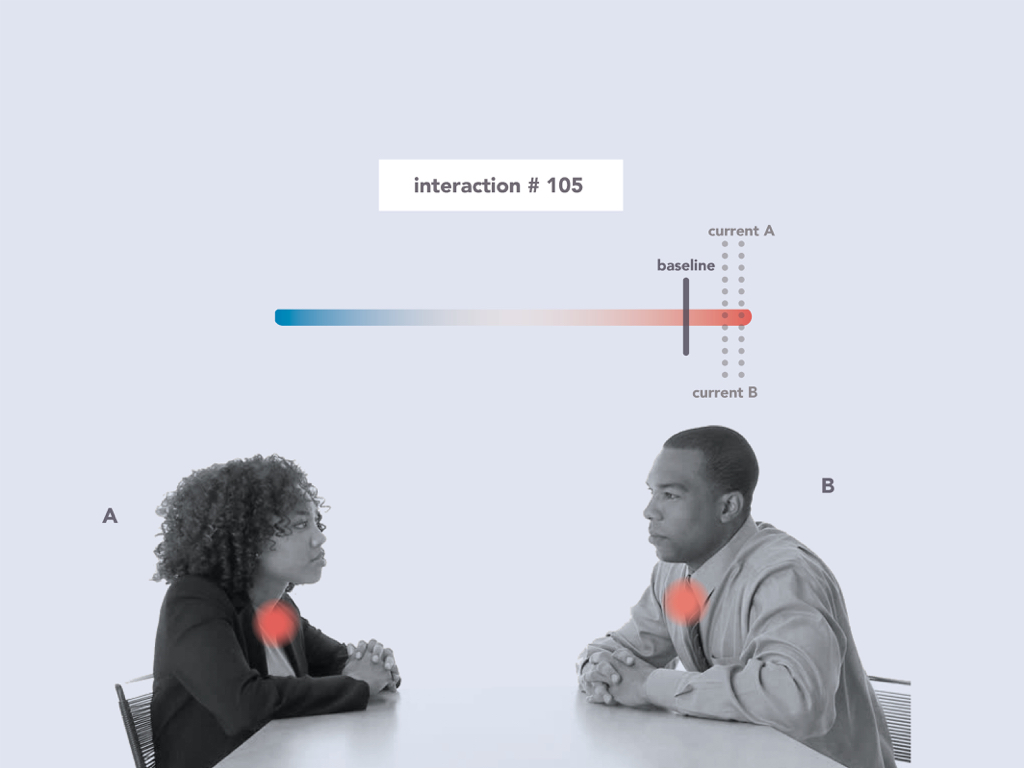

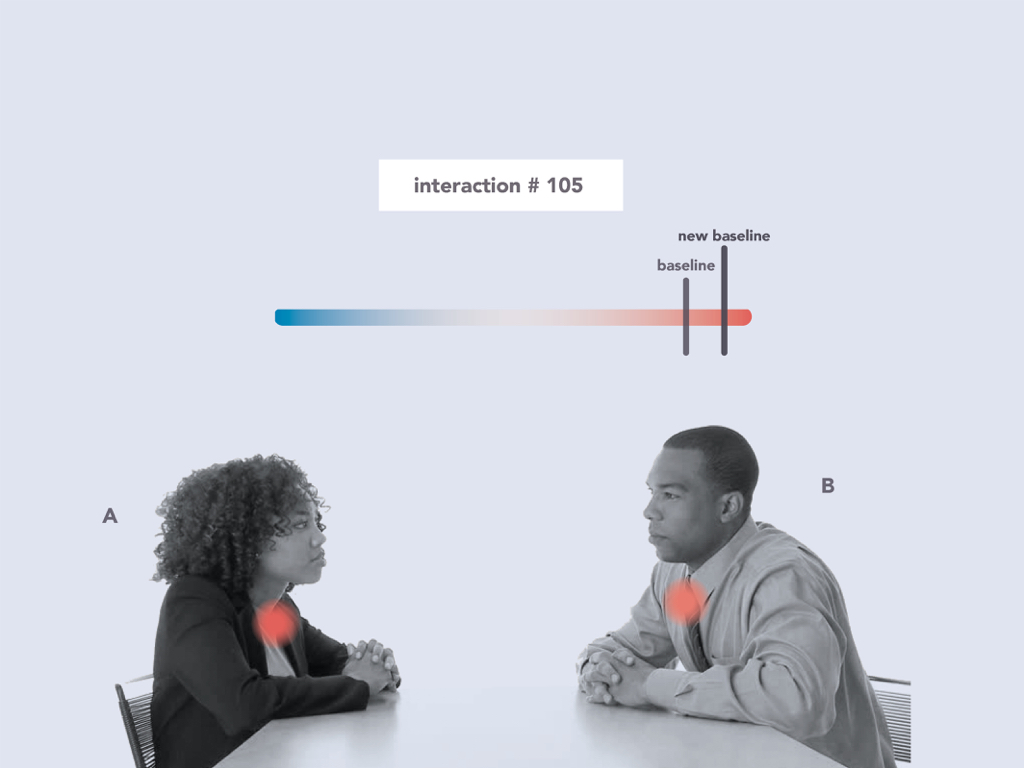

SEA’s capabilities allow the wearer to engage in two scales of awareness: the baseline state of a relationship and real-time conversation. Each of these scales gives the user useful information about relationship dynamics. The first is passive: the wearer simply feels the map of current relationship states. The second is active: the device listens to and analyzes conversational data in real time.

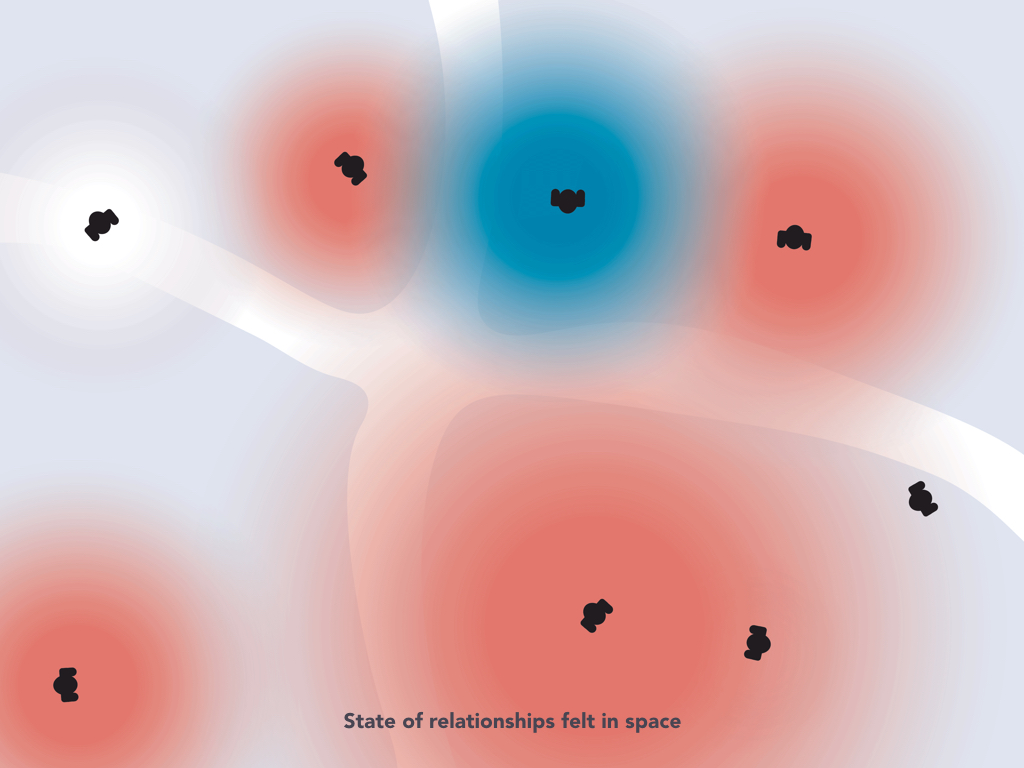

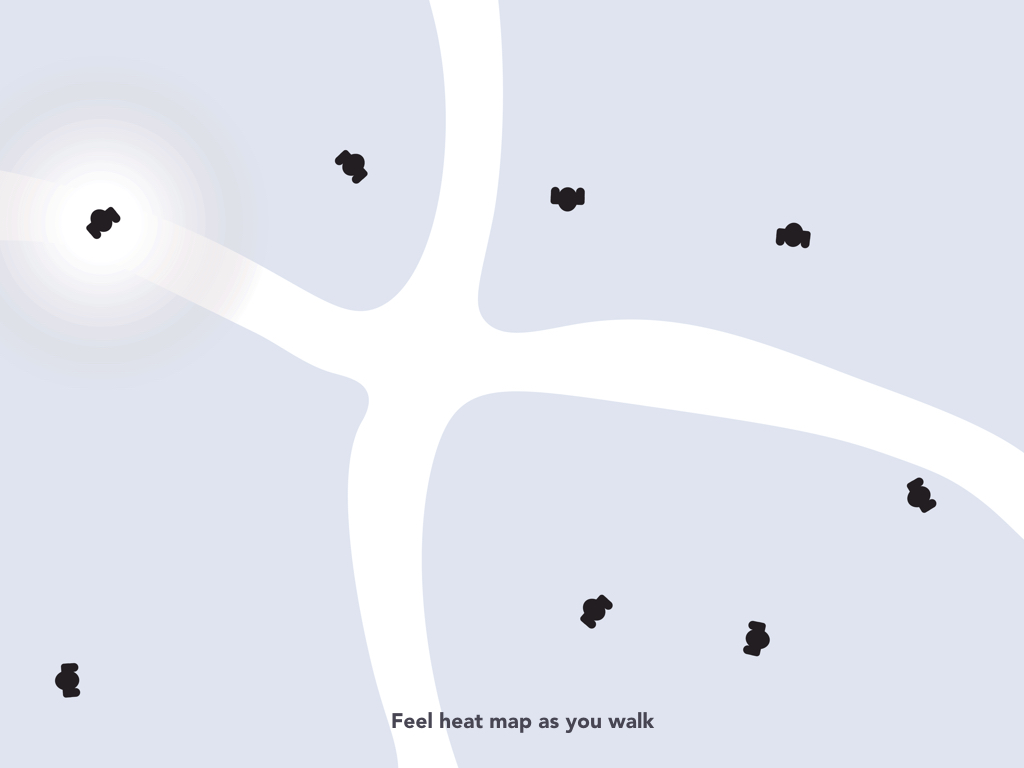

SEA affords the wearer the opportunity to experience a heatmap of their relationships while they are navigating through space. They can feel the warmth of a good relationship around them, and can also be aware of the presence of a negative influence in their space. In environments where relationships are undeveloped, and still in the early stages of their emergence, the user will feel no temperature difference from room temperature. The device will not provide additional heat or coldness. But in spaces where there are more fully formed relationships, the wearer will feel a more defined temperature gradient that deviates from the neutral room state. This lets the user become aware of the direction of the interactions they have in a particular space. SEA becomes a thermal navigator for physical space.

In terms of instantaneous personal relationships, you can feel when a conversation is going really well and developing past its previous state, and you can feel when a conversation is moving backward.

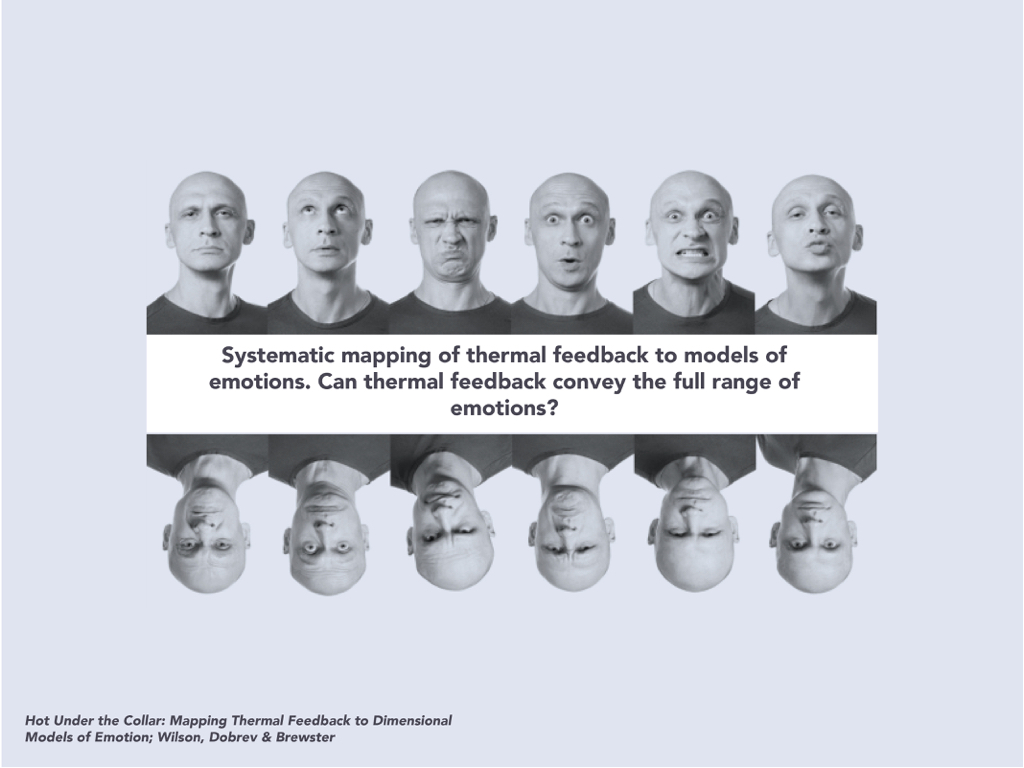

We were trying to understand whether temperature could allow for both internal awareness (emotion) and external awareness (social and spatial). Instead of loading our wearable with every possible type of feedback (output), we attempted to create a wearable that was very subtle in the way it revealed information to a person. As discussed earlier in the related work section, the experience of temperature (heat and coldness) can impact how people behave in interactions with others. Something as subtle as holding an iced coffee as opposed to a warm cup of coffee can change how warmly someone behaves when talking with another person. We wondered whether we could use temperature as a translator of positive or negative feelings and emotions. We wanted to focus on subtle cues that could hint at something being different in a relationship, as opposed to outright telling someone “your relationship is suffering” or “your relationship is wonderful”. Relationships are complex: there will always be a mix of hot and cold feelings. Using a slight difference in temperature allows us to scale the perception of a relationship over time while tuning the general feeling of the relationship. We wanted to emphasize the time element of the relationship, and used temperature as a way to notice subtle deviations from what the relationship had been over time.

Hardware Build

To keep the project complexity low and to make it possible to fit the same circuit structure (data and electrical flow) inside the SEA form factor presented here, we used one microcontroller (Arduino Micro Pro) receiving data from a Bluetooth Low Energy (BLE) module. The BLE module reads the received signal strength indicator (RSSI) from other nearby BLE-enabled devices, e.g. another SEA device. One of the main issues is power management and current flow. For this reason we added a couple of voltage regulators to the system to supply 5 V and 3.3 V to the microcontroller and the BLE module, respectively. The Peltier plate draws too much current (around 2 A) to be controlled directly by the microcontroller I/O pins. We therefore built a temperature controller composed of MOSFET transistors driving an H-bridge circuit.

The device uses a thermoelectric cooler and heater component based on the Peltier effect, which creates a heat flux between the two sides of a plate. Using an H-bridge circuit driven by MOSFET transistors, we can control the heat flux and therefore the device’s temperature.
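The control idea can be sketched in a few lines. This is a minimal illustration and not the firmware that runs on the device: the signed drive level, the two PWM legs and the 8-bit duty range are all assumptions made for the example. A positive level drives one side of the H-bridge (heating), a negative level drives the other (cooling), and the two sides are never driven together.

```python
from dataclasses import dataclass


@dataclass
class BridgeCommand:
    """PWM duties for the two legs of a hypothetical H-bridge driver."""
    heat_duty: int  # 0-255, current flows in the "heating" direction
    cool_duty: int  # 0-255, current flows in the "cooling" direction


def bridge_command(level: float, max_duty: int = 255) -> BridgeCommand:
    """Map a signed drive level in [-1.0, 1.0] to H-bridge PWM duties.

    Positive levels heat, negative levels cool, and zero switches the
    plate off. Only one leg is ever driven at a time.
    """
    level = max(-1.0, min(1.0, level))  # clamp out-of-range requests
    duty = round(abs(level) * max_duty)
    if level > 0:
        return BridgeCommand(heat_duty=duty, cool_duty=0)
    if level < 0:
        return BridgeCommand(heat_duty=0, cool_duty=duty)
    return BridgeCommand(heat_duty=0, cool_duty=0)
```

Reversing the direction of the current through the Peltier plate swaps its hot and cold sides, which is why a single signed value is enough to describe both heating and cooling.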

As explained before, the distance tracking system is based on Bluetooth Low Energy modules: inside each SEA device there is a BLE module that can work as a scanner or as a beacon (advertising BLE packets with information such as device name, type and manufacturer). Based on the exchange of BLE information, the device can calculate the RSSI (received signal strength indicator), expressed in dBm: the power ratio in decibels (dB) of the measured power referenced to one milliwatt.
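To make the units concrete, the sketch below converts a power in milliwatts to dBm and then turns an RSSI reading into a rough distance using the common log-distance path-loss model. The model, the reference RSSI at 1 m (-59 dBm) and the path-loss exponent (2.0) are assumptions chosen for illustration; the write-up does not say how SEA converts RSSI into distance, and real readings are noisy enough that they usually need smoothing.

```python
import math


def milliwatts_to_dbm(power_mw: float) -> float:
    """Express a power in milliwatts as dBm (decibels relative to 1 mW)."""
    return 10.0 * math.log10(power_mw)


def estimate_distance_m(
    rssi_dbm: float,
    rssi_at_1m_dbm: float = -59.0,    # assumed calibration value
    path_loss_exponent: float = 2.0,  # assumed; 2.0 corresponds to free space
) -> float:
    """Estimate distance in metres with the log-distance path-loss model.

    rssi = rssi_at_1m - 10 * n * log10(d)  =>  d = 10 ** ((rssi_at_1m - rssi) / (10 * n))
    """
    return 10.0 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))
```

With these assumed defaults, a reading equal to the 1 m reference maps to 1 m, and every further 20 dB drop multiplies the estimated distance by ten.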

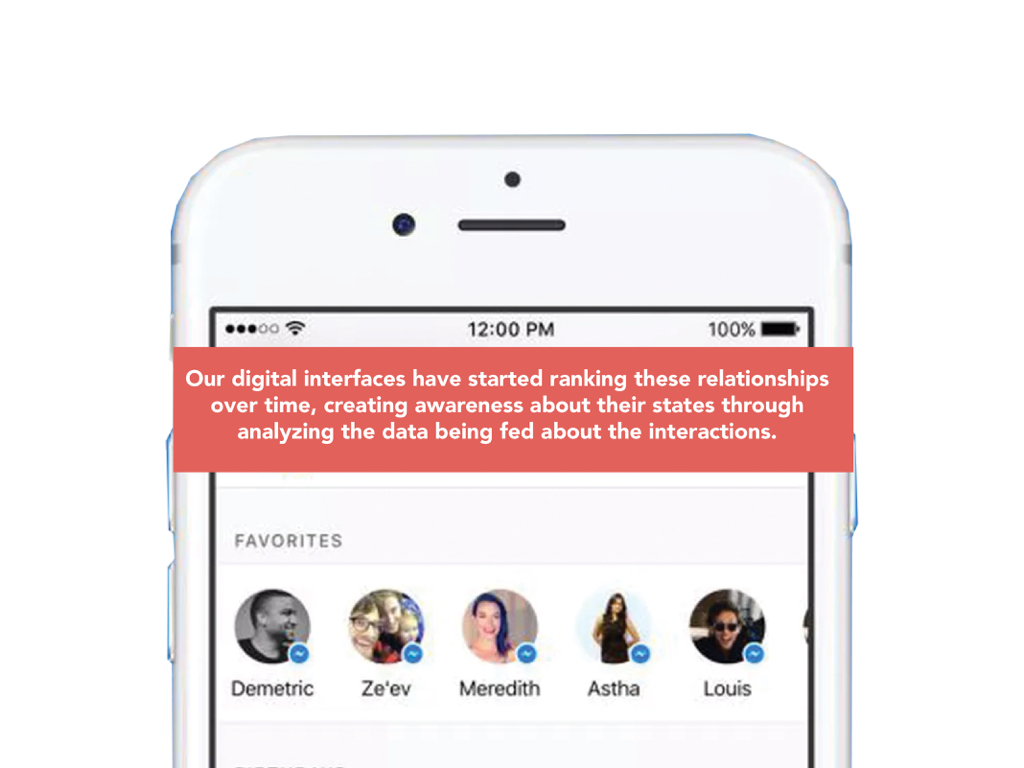

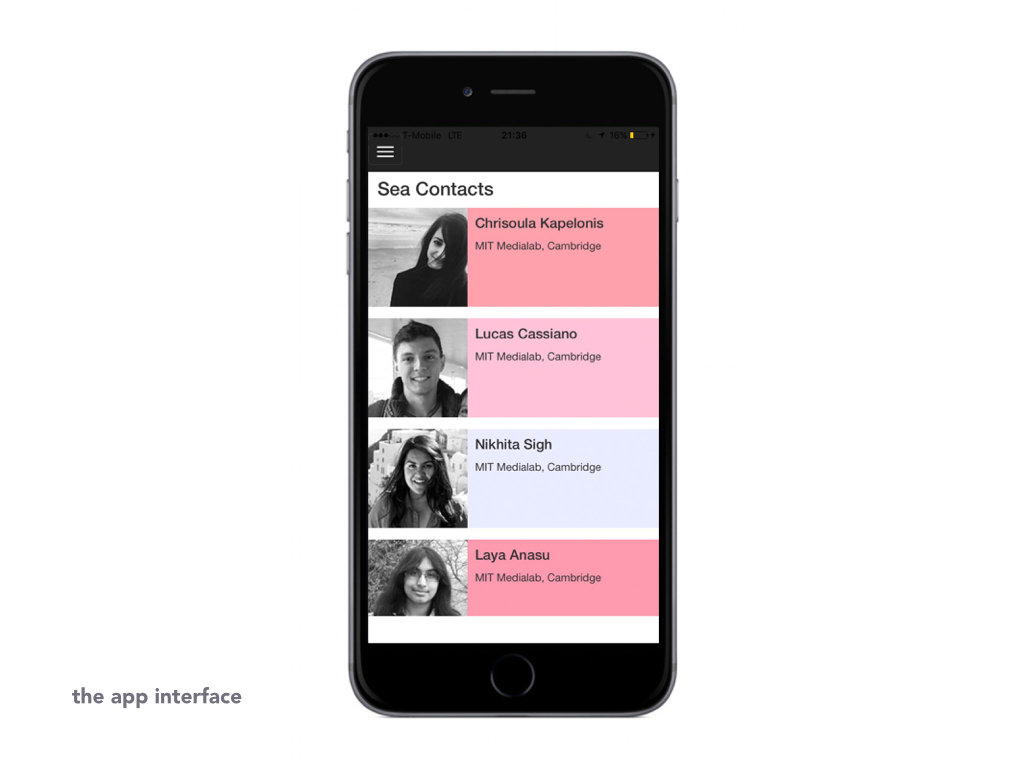

Software: Mobile Interface

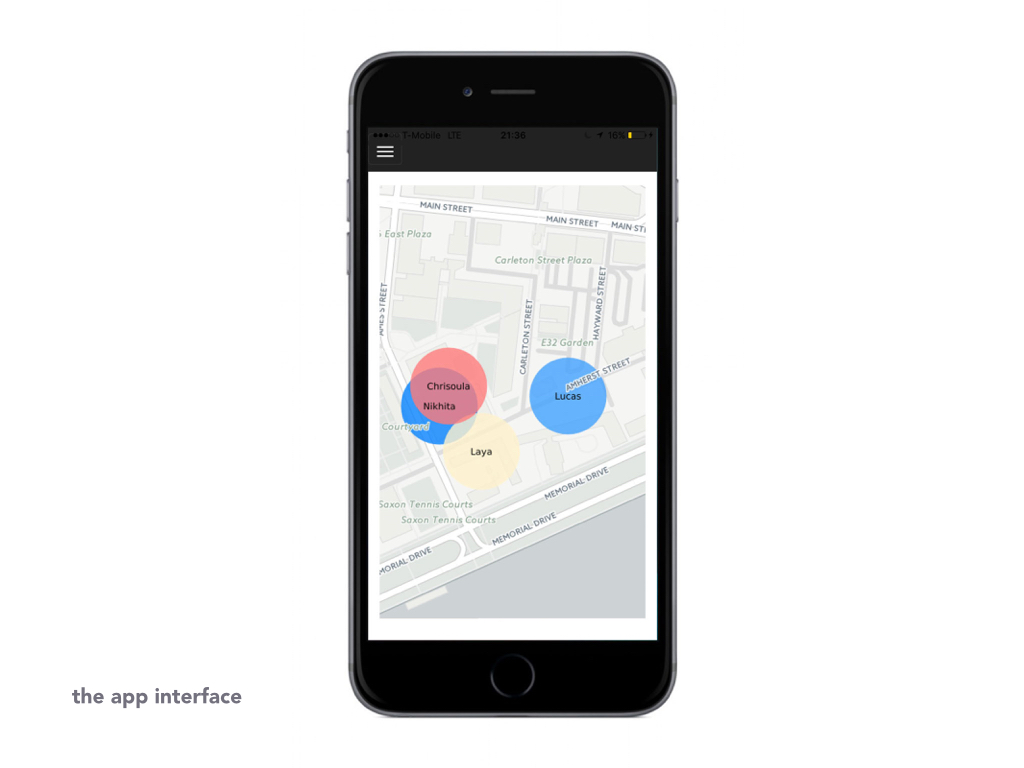

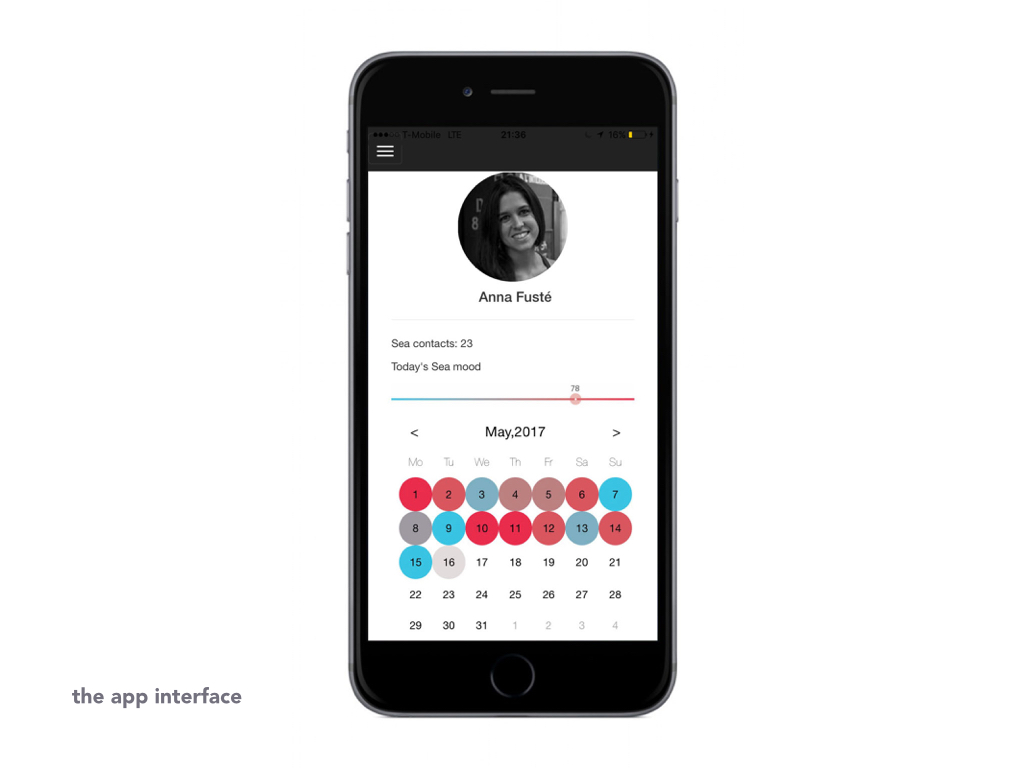

SEA is controlled by a mobile phone application in which the user can configure and visualize their interactions with others (a). The user can check their profile, which shows the SEA level for the current day. The user can also see interactions over time on a calendar (b), which shows how the SEA interactions have evolved. A list of contacts is displayed in the Contacts section (c). Every user is listed with a background colour that reflects the past interactions the main user has had with them: blue if the interactions were not friendly, red if they were warm. The colour represents the average temperature felt with each person across all interactions up to that moment. Finally, a map of the spatial location of each user is displayed (d). The map also shows the colour of the average temperature per user and their influence on the space around the main user.
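The colouring in the Contacts section can be illustrated with a small sketch. It is not the app's code: it assumes each interaction is stored as a signed score between -1.0 (cold) and 1.0 (warm), and it assumes a white background for a neutral or empty history, neither of which is stated above.

```python
from statistics import mean
from typing import Sequence, Tuple


def average_temperature(scores: Sequence[float]) -> float:
    """Average the signed interaction scores; no history counts as neutral."""
    return mean(scores) if scores else 0.0


def contact_colour(avg: float) -> Tuple[int, int, int]:
    """Map an average score in [-1.0, 1.0] to an (R, G, B) background colour.

    -1.0 gives pure blue, 1.0 gives pure red, and 0.0 gives white (assumed).
    """
    t = max(-1.0, min(1.0, avg))        # clamp to the expected range
    fade = round(255 * (1.0 - abs(t)))  # stronger average -> more saturated
    if t >= 0:
        return (255, fade, fade)        # warm interactions shade toward red
    return (fade, fade, 255)            # unfriendly interactions shade toward blue
```

For example, `contact_colour(average_temperature([1.0, 1.0]))` yields pure red, while a contact with no recorded interactions stays white.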

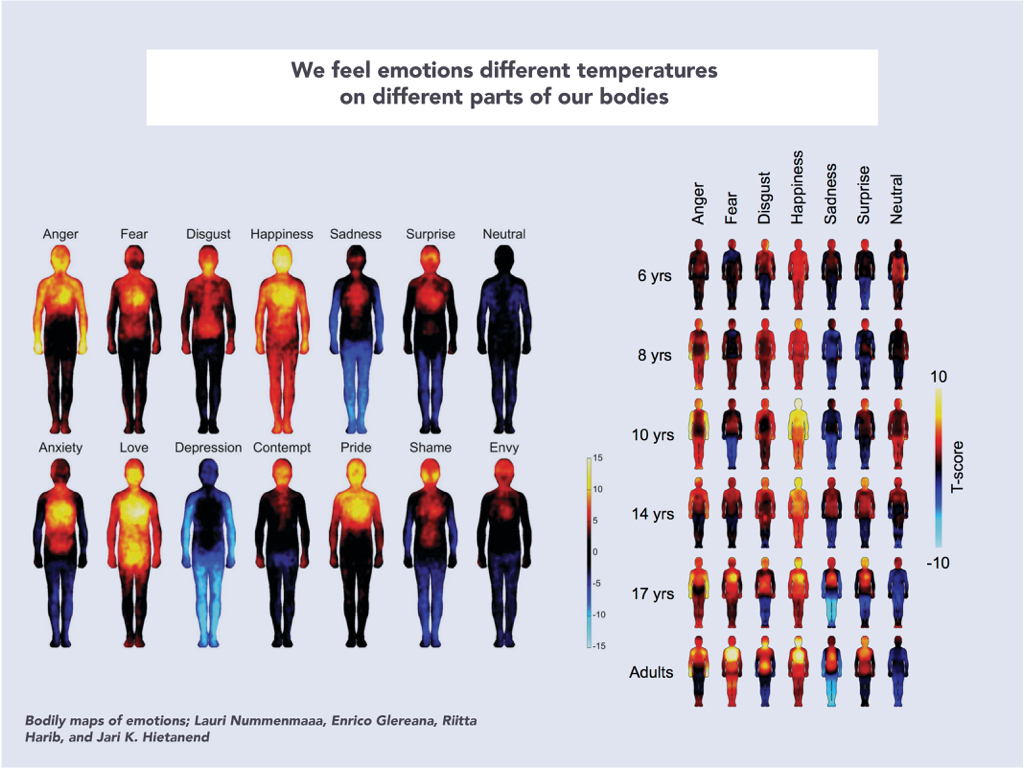

Detecting Emotions

The on-the-body form factor of SEA makes it possible to detect multiple inputs as a means of understanding the emotions of the user. Both speech data and physical data (i.e. skin conductance and heart rate) are meaningful metrics for detecting the emotional state of an individual in an interaction. In addition to these modalities, semantic analysis can also be used to understand the digital footprint of a relationship through messages. Affective analysis using visual inputs from a camera could also be useful, but requires the user to be visible to a camera.

For the current prototype of SEA, we explored and implemented the ability to derive a subset of features that can be utilized to build a unified model for interpreting user emotions.

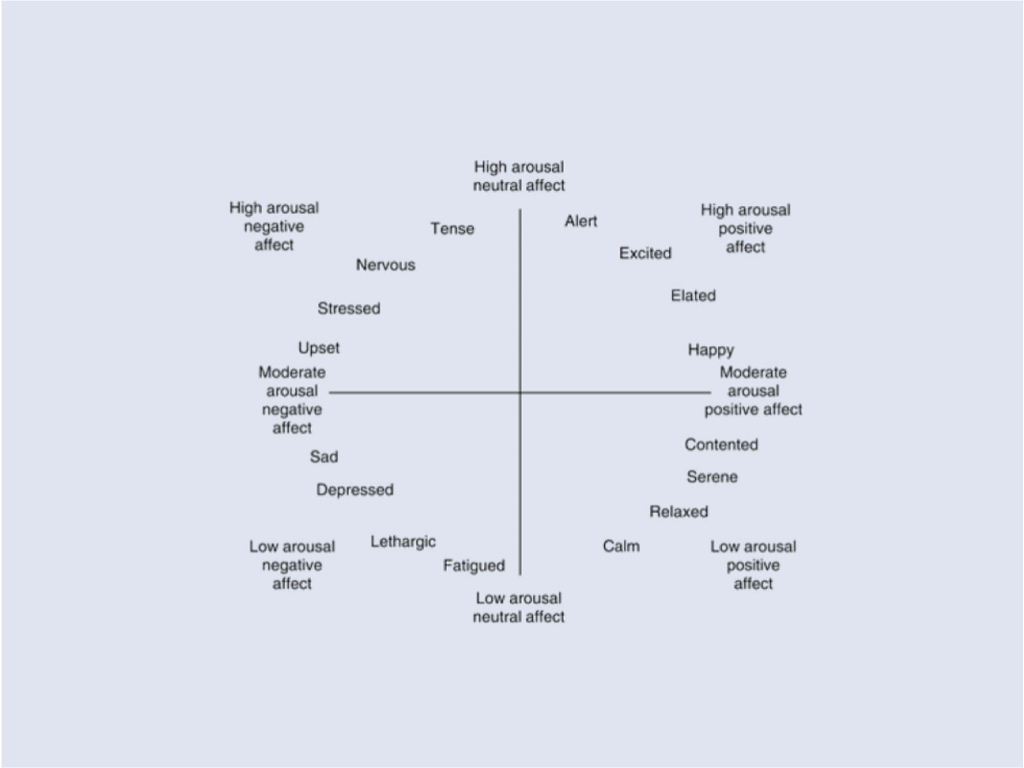

- Valence & Arousal are powerful mechanisms for mapping emotions. We utilized the Python library pyAudioAnalysis to extract values for both valence and arousal using an SVM (support vector machine) classifier.

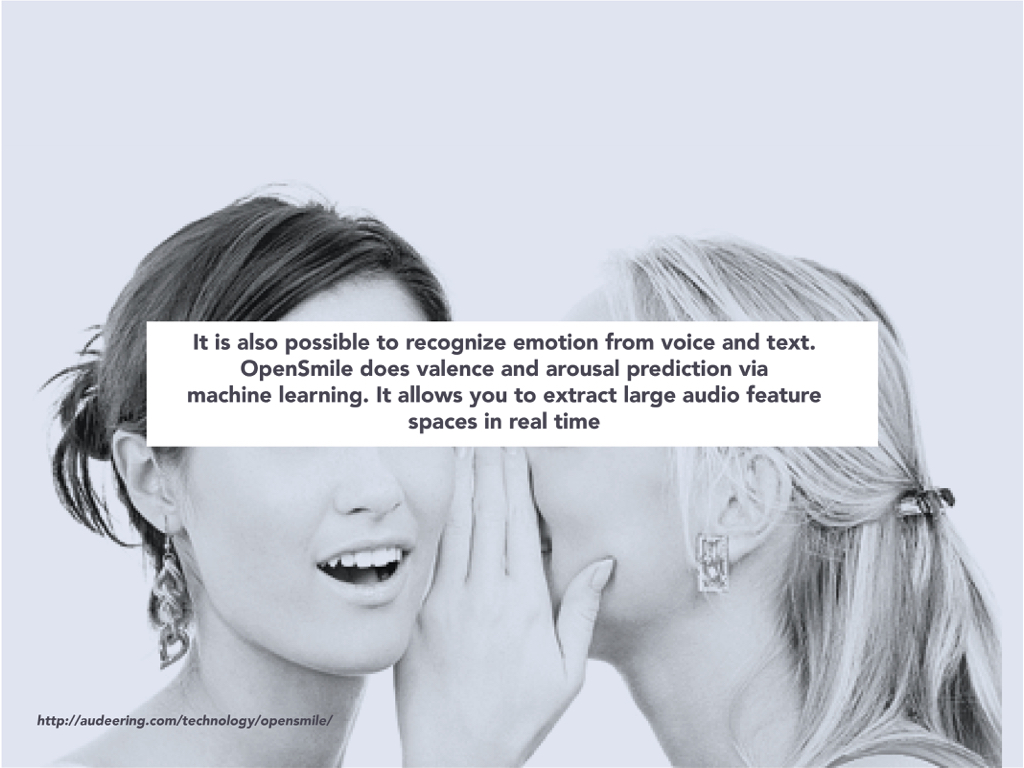

- Emotional Prosody is characterized by fluctuations in pitch, loudness, speech rate and pauses in the user’s voice. We utilized openSMILE to explore how to extract these features programmatically from audio files.

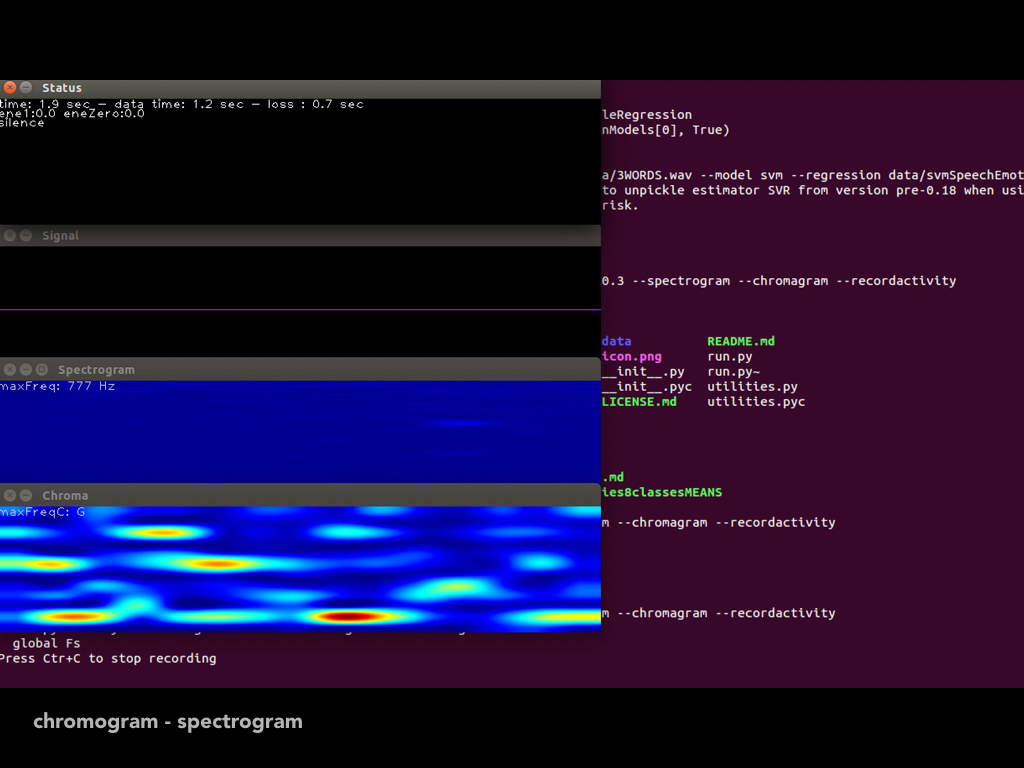

- Spectrograms have recently been used with deep convolutional neural networks for speech emotion recognition. We utilized the Python script paura to generate and experiment with spectrograms of sounds in real time.

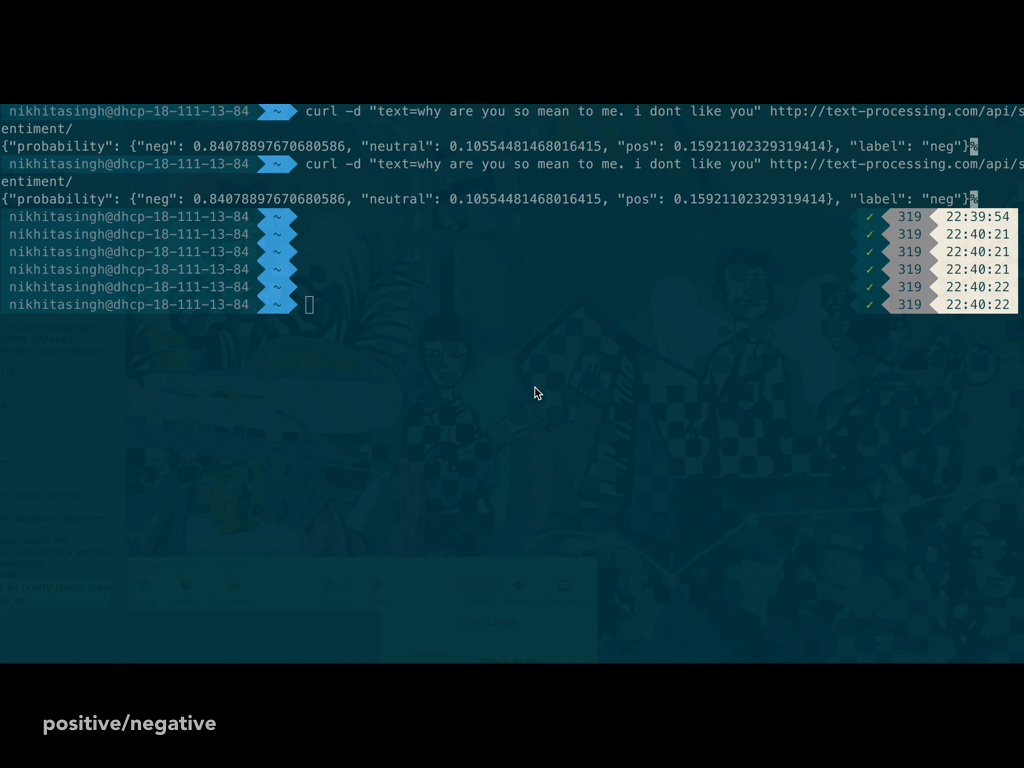

- Semantic Analysis can be used to process interactions over text, including messages and Facebook. We also explored the ability to classify a snippet of text as positive or negative. Extending this to process larger blocks of messages could be part of a future iteration of SEA.
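As a toy illustration of the positive/negative text classification mentioned in the last item, the sketch below scores a snippet by counting words from two small lexicons. The word lists and the scoring rule are hypothetical and chosen only for the example; the write-up does not describe the classifier the team explored, and a real system would need something considerably more robust.

```python
import re

# Hypothetical, deliberately tiny lexicons used only for illustration.
POSITIVE_WORDS = frozenset({"great", "love", "thanks", "happy", "wonderful"})
NEGATIVE_WORDS = frozenset({"hate", "angry", "annoyed", "awful", "terrible"})


def polarity(text: str) -> float:
    """Return a score in [-1.0, 1.0]; 0.0 means neutral or no known words."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(word in POSITIVE_WORDS for word in words)
    neg = sum(word in NEGATIVE_WORDS for word in words)
    if pos + neg == 0:
        return 0.0
    return (pos - neg) / (pos + neg)


def label(text: str) -> str:
    """Turn the polarity score into a coarse label."""
    score = polarity(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

A signed score like this could in principle feed the same warm/cold scale used elsewhere in SEA, though that link is an assumption rather than something described here.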

For more information about the project, please visit the Project Page.